A Primer on AI Data Centers

An overview of the history, technology, and companies exposed to the AI data center buildout

“As one financier observed at the time, the amount of money required by the burgeoning US electrical system was ‘bewildering’ and ‘sounded more like astronomical mathematics than totals of round, hard-earned dollars.’” - [A description of the electric grid buildout around 1900] Power Loss, Richard Hirsh 1999, via Construction Physics

We’re currently in the midst of one of the largest computing infrastructure buildouts in history.

100+ years ago, we saw a similar buildout of the electric grid (which ironically is a bottleneck for today’s buildout). Throughout the birth of the electric grid, we saw the scaling of power plants (building power plants as large as possible to capture performance improvements), “Astronomical” CapEx investments, and the plummeting cost of electricity.

Today, we’re seeing the scaling of data centers, “Astronomical” CapEx from the hyperscalers, and the plummeting cost of AI compute:

This is the introductory piece in a multi-part series breaking down the AI data center: what exactly it means, who supplies the components that go into the data center, and where opportunities may lie.

This piece will be specifically focused on the infrastructure required to build AI-specific data centers; for an introductory piece on data centers, I recommend reading this piece I published a few months back.

1. An Overview of the AI Data Center

The term “data center” doesn’t do justice to the sheer scale of these “AI Factories” (as Nvidia CEO Jensen Huang affectionately refers to them). The largest data centers cost billions of dollars across land, electrical and cooling equipment, construction costs, GPUs, and other computing infrastructure.

That’s before the energy costs. The largest new hyperscaler data centers will draw up to 1 GW of power. For reference, New York City consumes about 5.5 GW. So, for every five or six of these mega data centers, we’re adding the equivalent of another NYC to the grid.
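To sanity-check that comparison, here is a minimal back-of-the-envelope sketch in Python. The 1 GW and 5.5 GW figures come from the text above; the function names and the `utilization` parameter are my own illustrative assumptions, and real facilities rarely run at a constant full load.

```python
# Figures quoted in the article.
DATA_CENTER_GW = 1.0  # peak draw of one of the largest planned data centers
NYC_GW = 5.5          # approximate New York City demand

HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def data_centers_per_city(city_gw: float, data_center_gw: float) -> float:
    """How many data centers of a given size add up to one city's demand."""
    return city_gw / data_center_gw


def annual_energy_twh(power_gw: float, utilization: float = 1.0) -> float:
    """Annual energy in TWh for a facility drawing power_gw.

    utilization is an assumption (1.0 means running flat out all year).
    """
    return power_gw * utilization * HOURS_PER_YEAR / 1000.0


if __name__ == "__main__":
    ratio = data_centers_per_city(NYC_GW, DATA_CENTER_GW)
    print(f"Data centers per NYC: {ratio:.1f}")
    print(f"Annual energy at full load: {annual_energy_twh(DATA_CENTER_GW):.2f} TWh")
```

Under the full-load assumption, a single 1 GW facility would use about 8.76 TWh a year, which is simply 1 GW multiplied by 8,760 hours.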

We can broadly break down the data center value chain into a few categories: the initial construction to develop a data center, the industrial equipment to support the data center, the computing infrastructure in a data center, and the energy required to power the data center. Additionally, we have companies that own or lease data centers to provide end services to consumers.

We can visualize the value chain here:

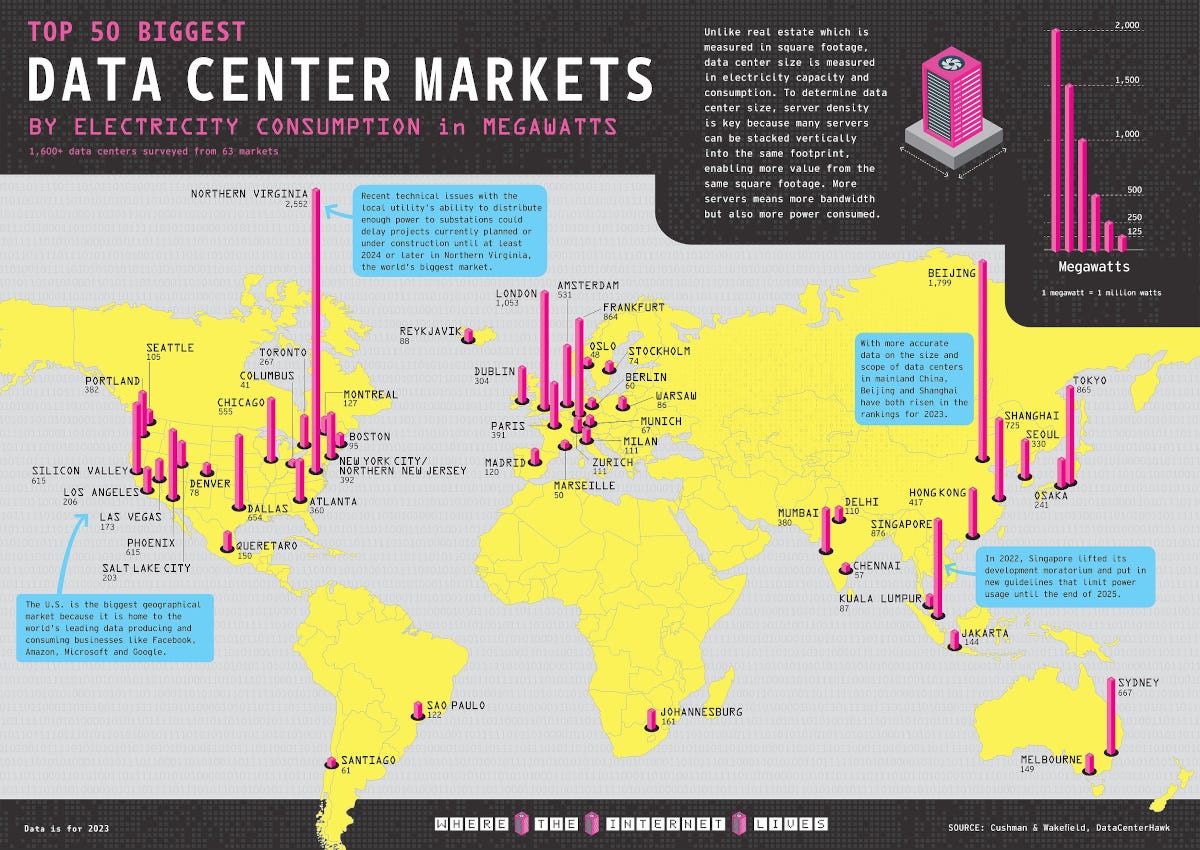

Before diving deeper, we should look at the history of data centers (which is especially relevant to the energy crunch we’re seeing today, specifically in the Northern Virginia region).

2. An Abridged History of Data Centers

Data centers, in large part, have followed the rise of computers and the rise of the internet. I’ll briefly discuss the history of these trends and how we got here.

Early History of Data Centers

The earliest versions of computing look similar to data centers today: a centralized computer targeted at solving a compute-intensive and critical task.

We have two early examples of this:

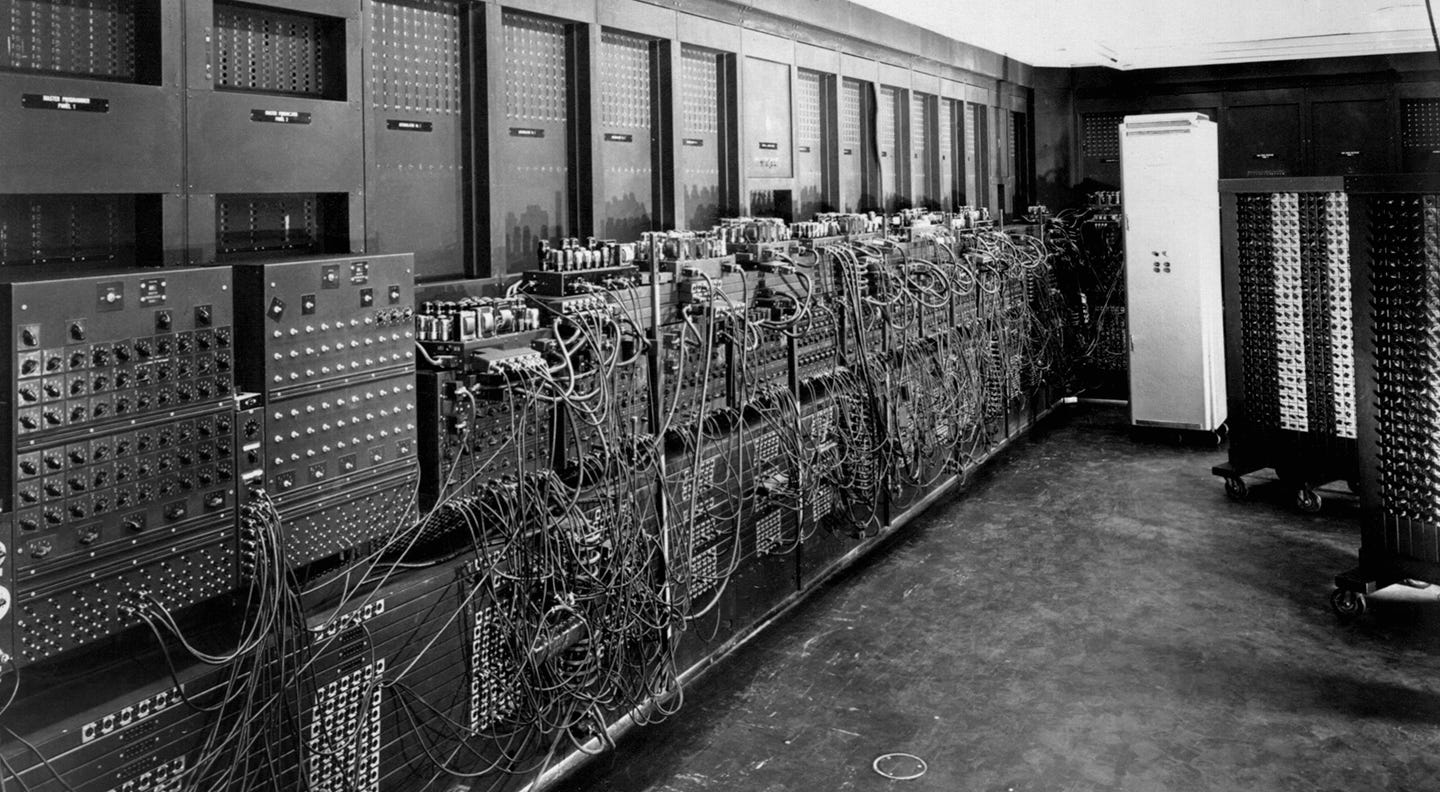

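The Colossus - The British codebreaking computer built for Bletchley Park during WW2. It was designed by engineer Tommy Flowers to break the German Lorenz cipher; Alan Turing’s better-known codebreaking work targeted the Enigma machine. (Note: Turing is also considered the father of Artificial Intelligence and Computer Science. He proposed the Turing Test as a way of judging whether a machine can exhibit human-like intelligence, a bar that some argue chatbots like ChatGPT have now cleared.)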

The ENIAC - The computer designed during WW2 for the US Army but not publicly unveiled until 1946. The Colossus was built before the ENIAC, but the ENIAC is often considered the first computer because the Colossus remained classified for decades.

Both were housed in what could be considered the “first data centers.”

https://www.simslifecycle.com/blog/2022/the-journey-of-eniac-the-worlds-first-computer/

In the 1950s, IBM rose to dominate computing with the mainframe computer. This would lead to their multi-decade dominance in technology, with AT&T being another of the dominant technology companies of the time.

The ARPANET, launched in 1969, was developed as a way to connect the growing number of computers in the US. It’s now considered the earliest version of the internet. Given that it was a government project, its densest connections were around Washington, DC.

This was the root of Northern Virginia’s computing dominance. As each new generation of data centers was built, they wanted to use the existing infrastructure in place. That happened to be in the Northern Virginia region and still is today!

https://www.visualcapitalist.com/cp/top-data-center-markets/

The Rise of the Internet & the Cloud

In the 1990s, as the internet grew, we needed more physical infrastructure to process the increasing amount of Internet data. In part, this came in the form of data centers as interconnection points. Telecom providers like AT&T had already built out communications infrastructure, so it was a natural expansion for them.

However, these telecom companies had a similar co-opetition dynamic to vertically integrated cloud providers today. AT&T owned the data being passed through their infrastructure and the infrastructure itself. So, in the event of limited capacity, AT&T would prioritize its own data. Companies were wary of this dynamic, which led to the rise of data center companies like Digital Realty and Equinix.

Data centers saw massive investment throughout the dot-com bubble, but that slowed significantly once the bubble burst (a lesson we should keep in mind when extrapolating data).

The slump started to reverse in 2006 with the release of Amazon Web Services. Since then, data center capacity in the US has grown fairly steadily.

Enter AI Data Centers

That steady growth continued until 2023, when the AI frenzy grabbed hold of us. Estimates now show data center capacity doubling by 2030 (keep in mind, these are estimates).

The unique workloads of AI training led to a renewed focus on data center scale. The closer together computing infrastructure sits, the more performant it can be. Additionally, when data centers are designed as units of compute and not just rooms of servers, companies can get additional integration benefits.

Finally, since training doesn’t need to be close to end users, data centers can be built anywhere.

Summarizing AI data centers today: they’re focused on size, performance, and cost, and they can be built anywhere.

3. What Does It Take to Build an AI Data Center?

1. Building an AI Data Center

A compute provider (hyperscaler, AI company, GPU cloud) will either build the data center themselves or will work with a data center developer like Vantage, QTS, or Equinix to find a plot of land with energy capacity.

They’ll then hire a general contractor to manage the construction process, who will in turn hire subcontractors for each function (electrical, plumbing, HVAC) and purchase raw materials. Laborers will then move to the area for as long as the project is running. After “the shell” of the building is put up, the next step is installing equipment.

https://blog.rsisecurity.com/how-to-build-and-maintain-proper-data-center-security/

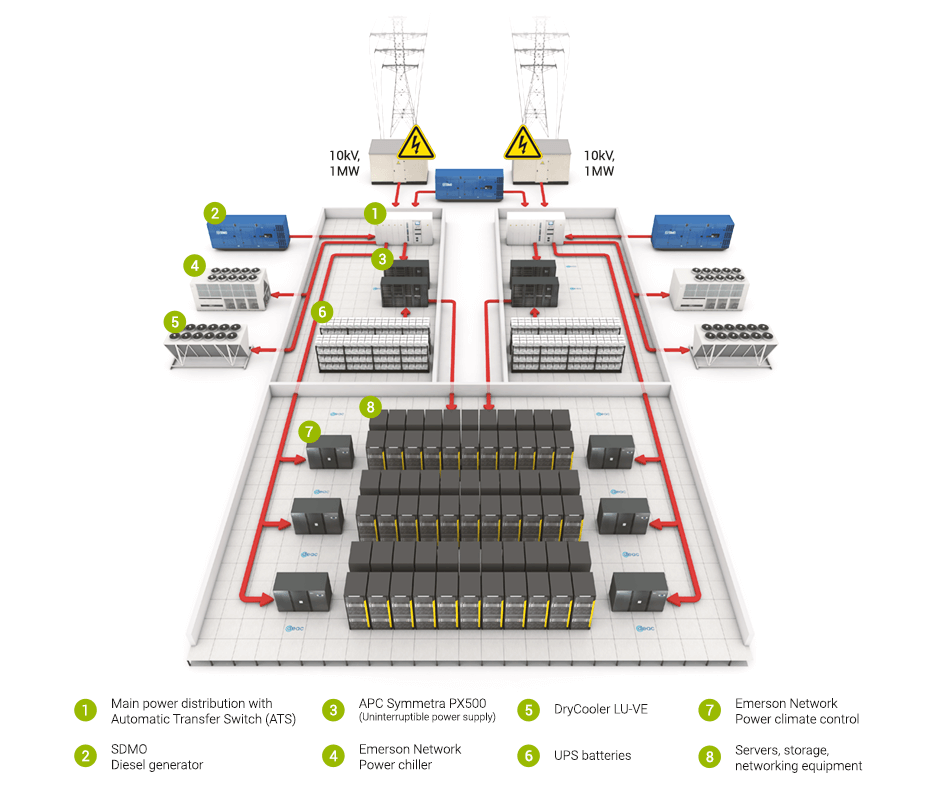

Data center industrial equipment can broadly be broken down into electrical and cooling equipment. Electrical equipment starts with a main switchgear that connects to external energy sources. That then connects to power distribution units, uninterruptible power supplies (UPSs), and the cabling that connects to the server rack. Most data centers will also have diesel generators as backups in power outages.

The second category is mechanical and cooling equipment. This includes chillers, cooling towers, HVAC equipment, and the liquid or air cooling that connects to the servers themselves.

2. Computing in an AI Data Center

Computing infrastructure includes the equipment running AI training and inference workloads. The primary equipment is the GPUs or other accelerators. In addition to Nvidia, AMD, and the hyperscalers, there are a host of startups racing for a piece of the AI accelerator pie:

While less important than in the past, CPUs still play an important role in completing complex operations and “delegating” tasks. Storage stores data separate from the chip while memory will store data that needs to be constantly accessed. Networking then connects all of the components, both within the server and outside the server.

Finally, all of this is packaged in a server to be installed in a data center. We can visualize one of those servers here (note: storage will be external).

3. Powering an AI Data Center

The energy supply chain can broadly be broken down into:

Sources - Fossil fuels, renewables, and nuclear that can generate power.

Generation - Power plants turn fossil fuels into electricity; for renewables, this happens much closer to the source.

Transmission - Electricity is then transmitted via high-voltage lines to a point close to the destination. Transformers and substations take the high-voltage electricity and step it down to levels that are manageable for consumption.

Utilities/Distribution - Utilities will manage the last mile distribution and manage the delivery of power through power purchase agreements (PPAs).

Transmission and distribution together are what’s commonly referred to as “the electric grid,” and they are managed locally. Depending on the location, either one can be a bottleneck to energy delivery.

Energy is proving to be a key bottleneck for the AI data center buildout.

Unfortunately, there’s no easy way to increase energy capacity quickly. Data centers have two options: on-grid energy and off-grid energy. On-grid energy passes through the electric grid and is doled out by utilities. Off-grid energy bypasses the electric grid, for example through on-site solar, wind, and batteries or, even better, by building a GW data center next to a 2.5 GW nuclear power plant!

The problem with on-grid energy is the time it takes to expand grid capacity; see below for transmission wait times from request to commercial operation. (This refers to the time from when an energy source applies for transmission capacity to when it is actually in use.)

Inevitably, it will take a combination of solutions to address these challenges. More on this in the final section.

4. What’s New About AI Data Centers?

This new generation of data centers is bigger, denser, faster, and more energy-demanding.

The “super-sizing” of data centers is not new. You can find articles every few years about the supersizing of data centers, from a few MW in 2001, to 50 MW in the 2010s, to “massive 120 MW” data centers in 2020, to the gigawatt data centers of today.
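To put those jumps in perspective, the short sketch below computes the multiple between each quoted facility size. The figures are the ones cited above; the 2001 data point is left out because “a few MW” isn’t an exact number, and the variable and function names are purely illustrative.

```python
# Facility sizes quoted in the text, in megawatts (MW).
# The 2001 figure ("a few MW") is omitted because no exact number is given.
SIZES_MW = [
    ("2010s", 50),
    ("2020", 120),
    ("today", 1000),  # 1 GW = 1,000 MW
]


def growth_multiples(sizes):
    """Return (from_label, to_label, multiple) for each consecutive pair."""
    return [
        (a_label, b_label, b_mw / a_mw)
        for (a_label, a_mw), (b_label, b_mw) in zip(sizes, sizes[1:])
    ]


for start, end, multiple in growth_multiples(SIZES_MW):
    print(f"{start} -> {end}: {multiple:.1f}x")
```

That works out to roughly 2.4x from the 2010s to 2020, and about 8.3x from 2020 to today’s gigawatt designs, so the most recent step is by far the largest of the quoted jumps.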

These GW data centers are denser as well, being designed from a systems perspective. The core problem being solved here is the slowing of Moore’s Law, the idea that semiconductors will continually improve in performance as transistor density increases. Those transistor improvements are becoming increasingly challenging, so the solution is to bring the components of the server, and even the entire data center, closer together.

In practice, that means data centers are being designed as integrated systems instead of rooms full of individual servers. Those servers are also being designed as integrated systems, bringing everything closer together.

This is why Nvidia sells servers and PODs, why the hyperscalers are building systems-level data centers, and presumably why AMD acquired ZT Systems.

We can see a visual of Nvidia’s DGX H100 below, which can be a standalone server, or connected in PODs to other GPUs, or connected in SuperPODs (!) for even more connections:

Nvidia has also helped pioneer “accelerated computing”, the offloading of tasks from the CPU, which increases the importance of every other component, including GPUs, networking, and software.

On top of this, the unique needs of AI require processing massive amounts of data. This makes the ability to store increasingly large amounts of data (memory/storage) and move increasingly large amounts of data quickly (networking) even more important. This is similar to the heart pumping blood, with GPUs being the heart and data being the blood (this is why the architecture in Google’s TPUs is called a systolic array).

All of these trends align to form the most powerful computers on the planet. This computing power leads to more energy consumption, more heat creation, and more cooling required for each server. That energy consumption is only increasing (along with our appetite for compute power):

5. Bottlenecks & Beneficiaries

This is not an exhaustive list of beneficiaries, but it is the list I’m currently most interested in. The entire supply chain is stretched thin, and I’ve heard anecdotes of bottlenecks ranging from a lack of skilled workers to build transformers to permitting delays.

1. Expanding (or Enhancing) the Electric Grid or Getting Around It

It’s quite clear that our energy infrastructure needs to develop to support this buildout. Almost every tech company would prefer access to on-grid power: it’s more reliable and less work to manage. Unfortunately, when that’s unavailable, hyperscalers are taking matters into their own hands. For example, AWS is investing $11B into a data center campus in Indiana, and building four solar farms and a wind farm to power it (600 MW).

Over the mid to long term, I’m most optimistic about two areas to address the energy bottleneck: nuclear and batteries. Both can provide more sustainable energy sources to data centers.

Nuclear pros are well documented: clean, reliable power. The challenge is figuring out how to build nuclear in an economically viable way. Some of the most exciting startups in the world (in my humble opinion) are tackling this challenge.

Long-duration battery innovation will be an important step forward for renewable energy. The problem with solar and wind is that they’re inconsistent; they only provide energy when it’s windy or the sun is out. Long-duration batteries help manage this by storing energy when there’s a surplus and deploying it when there’s scarcity.
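As a rough illustration of that store-on-surplus, deploy-on-scarcity logic, here is a minimal Python sketch. Everything in it is hypothetical: the `Battery` class, the hourly supply profile, the 100 MW load, and the 200 MWh capacity are made-up numbers for illustration, and the model ignores round-trip losses, charge-rate limits, and degradation.

```python
from dataclasses import dataclass


@dataclass
class Battery:
    """Toy battery model (hypothetical; no losses or charge-rate limits)."""
    capacity_mwh: float
    charge_mwh: float = 0.0

    def step(self, supply_mw: float, demand_mw: float, hours: float = 1.0) -> float:
        """Advance one interval and return unmet demand in MWh."""
        surplus_mwh = (supply_mw - demand_mw) * hours
        if surplus_mwh >= 0:
            # Surplus: store what fits, anything beyond capacity is curtailed.
            self.charge_mwh = min(self.capacity_mwh, self.charge_mwh + surplus_mwh)
            return 0.0
        # Scarcity: discharge as much as is available to cover the shortfall.
        shortfall_mwh = -surplus_mwh
        discharged_mwh = min(self.charge_mwh, shortfall_mwh)
        self.charge_mwh -= discharged_mwh
        return shortfall_mwh - discharged_mwh


def simulate(supply_profile_mw, demand_mw, battery):
    """Return the unmet demand (MWh) for each hourly interval."""
    return [battery.step(supply_mw, demand_mw) for supply_mw in supply_profile_mw]


# Hypothetical hourly solar output (MW) feeding a constant 100 MW load.
solar_mw = [0, 0, 150, 200, 150, 0]
battery = Battery(capacity_mwh=200)
unmet = simulate(solar_mw, demand_mw=100, battery=battery)
print(unmet)
print(battery.charge_mwh)
```

In this toy run the load goes unserved in the first two hours because the battery starts empty, but the energy banked during the sunny hours covers the final hour after the sun goes down. That is the basic reason storage duration matters so much for solar and wind.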

2. Construction Permitting & Liquid Cooling

On the industrial side, two trends I’m excited about are permitting automation and liquid cooling. When I spoke to people while researching this article, one topic consistently came up as a bottleneck for this buildout: permitting.

For data centers and energy expansion, developers need permits for building, environmental, zoning, noise, etc. They may need approval from local, state, and national agencies. This is in addition to navigating right of first refusal laws, which vary by location. For energy infrastructure, this is even more painful. Permitting software companies like PermitFlow are in a prime position to help ease these pains.

One of the clear differences in the new AI data centers is the increasing amount of heat generated by servers. The new generation of data centers will be liquid-cooled, and the next generation may be immersion-cooled.

3. An Obligatory Hat Tip to the Computing Companies

It’s hard not to acknowledge (1) the incredible job Nvidia’s done building out their ecosystem and (2) the job AMD has done solidifying themselves as a legitimate alternative. It’s incredible how well-positioned Nvidia is for AI, from applications to software infrastructure to cloud, systems, and chips. If you script out the perfect playbook of preparing for a technology wave, Nvidia has played it.

Crusoe is another company very well-positioned for this buildout, offering both AI computing and energy services.

Finally, computing companies exposed to the data center buildout should continue to do well as revenue flows through the value chain. From networking to storage to servers, if a company provides top-tier performance, it will do well.

4. Final Thoughts

My final thought on the data center buildout is this: while it seems like a new trend, this is part of a broader history of the growth of computing. I don’t think AI, data centers, and computing should be different conversations.

As Sam Altman described:

Here is one narrow way to look at human history: after thousands of years of compounding scientific discovery and technological progress, we have figured out how to melt sand, add some impurities, arrange it with astonishing precision at extraordinarily tiny scale into computer chips, run energy through it, and end up with systems capable of creating increasingly capable artificial intelligence.

It’s no coincidence that Alan Turing is the father of modern computers, computer science, and AI. This has been one consistent trend over the last 100 years to create intelligence. And it’s one that data centers are at the heart of today.

As always, thanks for reading!

Disclaimer: The information contained in this article is not investment advice and should not be used as such. Investors should do their own due diligence before investing in any securities discussed in this article. While I strive for accuracy, I can’t guarantee the accuracy or reliability of this information. This article is based on my opinions and should be considered as such, not a point of fact. Views expressed in posts and other content linked on this website or posted to social media and other platforms are my own and are not the views of Felicis Ventures Management Company, LLC.

Having visited several data centers in Santa Clara recently and witnessed Pods of DGX H100s in action, with more racks being built out to hold larger super-Pods, it’s evident that we are still in the early part of this AI Data Center growth trajectory with more to come. The biggest bottleneck locally in the South Bay is the moratorium on power. These energy challenges are mentioned in the article https://www.datacenterfrontier.com/energy/article/55089067/silicon-valley-utilities-gird-for-surging-us-energy-demand-fed-by-new-data-centers.

Great overview - Missing many many firms that do facility and infrastructure design before the GC gets the project started. But all good information otherwise!