Meta's AI Strategy

Zuck’s Vision and Why Meta’s Spending so Much on Talent

The book of AI mega-investments now has another chapter. This one starts with Meta’s acquisition of Alexander Wang and 49% of Scale AI for $14.3B. The pseudo-acquisition’s valuation would put it among the four most valuable acquisitions of the last decade (among companies founded this millennium).

If reports are true, Meta is hiring SSI CEO Daniel Gross and his partner Nat Friedman (former GitHub CEO), and is taking a stake in their venture firm. Meta also considered acquiring Ilya Sutskever’s SSI (last valued at $32B) and Perplexity (last valued at $14B), and it is offering 9-figure pay packages for AI researchers to join its superintelligence team (total spend could be up to $5B).

So, Meta was prepared to spend at least $30B, err let me rephrase, whatever it takes to be competitive at the leading edge of AI.

This is apparently in response to the underperformance of Meta’s models. From Stratechery:

The proximate cause for this reevaluation is the apparent five alarm fire that is happening at Meta: the company’s latest Llama 4 release was disappointing — and in at least one case, deceptive — pushing founder and CEO Mark Zuckerberg to go on a major spending spree for talent.

This will not be an analysis of whether or not these investments will work, but rather why they’re making these investments. Similar to my arguments on big tech’s CapEx investments, this is all logical. In summary, Meta has:

Personal motivations: Meta’s history of conflict with the platform they sit on top of (Apple) has led to a “do anything necessary” mentality to own the next big platform.

Profitable applications of AI: Meta has one of the most profitable applications of AI in the world (advertising systems) to justify ROI on these investments.

An unsatisfactory rate of progress: Meta’s not making the progress Zuck wants in AI. Of the three largest paths towards model improvements (compute, data, and talent/algorithms), talent is the most differentiated today.

Incentives + no reasonable alternative: Meta has the applications to profit from AI (carrot) + a risk of relying on someone else for the next big platform shift (stick) + no reasonable investment alternative ($20B in profit last quarter!) = play the AI arms race.

If we squint, we can see the big vision for AI take form: a personal assistant at our fingertips, with context on our lives, that gets better as it becomes more powerful and more personalized. As it gets more integrated into our lives, it gets harder to replace.

The window to become that personal assistant is closing. This is the prize Meta is chasing, and the risk of underinvesting towards that vision is far greater than the risk of overinvesting.

This will be a rundown of Meta’s AI strategy, their motivations from past scars, the current state of their tech, and their vision for the future.

I. Some Historical Context

Three pieces of important historical context to understand Meta’s motivations:

Meta initially viewed itself as, and has always aspired to be, a platform company.

This started with the Facebook platform in the late 2000s and early 2010s, enabling companies to build apps on top of Facebook’s ecosystem (you guys remember Farmville?). In the early days, the platform was just as important as advertising in the eyes of Zuck and the team.

However, as mobile took off, it became clear Facebook was a mobile application, but the mobile platform would be Apple’s. And that fact has caused Zuck as much stress as any other:

"There are all these things that, over time, Google—but especially Apple—have prevented us from doing, even though I think they would be good for consumers. At some point, we ran the numbers and realized that if we were able to do all the things we thought were beneficial—considering the additional engagement, increased app usage, and avoiding various fees—I think we might be twice as profitable as a company as we are now." - Zuck in a Stripe Sessions Interview (lightly edited for clarity)

To avoid that pain again, Meta made two opinionated bets on the platforms of the future in the early 2010s: AR/VR and AI.

For AR/VR, Meta acquired Oculus for $2B in 2014. As has been well-documented, they’ve spent well over $60 billion in pursuit of owning that platform.

For AI, they hired Yann LeCun in 2013, as well as other top AI researchers, and developed a near duopoly on top AI talent with Google. FAIR (now Meta AI) became one of the most respected AI research labs in the world.

Meta created one of the most profitable applications of AI of any company in the world.

Meta has profitably spent tens (perhaps hundreds) of billions of dollars on GPUs. Digital advertising is one of the most profitable businesses in history, and there are only three ways to increase revenue: increase users, increase screen time, or increase the likelihood that an ad converts to a purchase.
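To make those three levers concrete, here is a minimal sketch of a toy multiplicative revenue model. Everything in it is an assumption for illustration: the `AdBusiness` class, its field names, and the baseline numbers are hypothetical and are not Meta's figures or Meta's actual model.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class AdBusiness:
    """Toy ad business; all fields are hypothetical illustration values."""
    users: float                 # lever 1: how many people use the app
    hours_per_user: float        # lever 2: screen time per user
    ads_per_hour: float          # ad load, held constant here
    conversion_rate: float       # lever 3: chance an ad leads to a purchase
    value_per_conversion: float  # what an advertiser pays per purchase

    def revenue(self) -> float:
        # Impressions come from users and screen time; revenue comes from
        # the share of impressions that convert.
        impressions = self.users * self.hours_per_user * self.ads_per_hour
        return impressions * self.conversion_rate * self.value_per_conversion


# Made-up baseline, not real data.
baseline = AdBusiness(
    users=1_000_000,
    hours_per_user=1.0,
    ads_per_hour=20,
    conversion_rate=0.01,
    value_per_conversion=2.0,
)

# Pull each lever by 10% while holding the others fixed.
scenarios = {
    "10% more users": replace(baseline, users=baseline.users * 1.10),
    "10% more screen time": replace(
        baseline, hours_per_user=baseline.hours_per_user * 1.10
    ),
    "10% better conversion": replace(
        baseline, conversion_rate=baseline.conversion_rate * 1.10
    ),
}

for name, scenario in scenarios.items():
    ratio = scenario.revenue() / baseline.revenue()
    print(f"{name}: {ratio:.2f}x baseline revenue")
```

In a purely multiplicative model like this one, a 10% gain on any single lever moves revenue by the same proportion. The practical difference is which lever a company can still move: users and screen time eventually saturate, while conversion can keep improving with better personalization.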

Meta’s recommendation systems are the best way to capitalize on the third variable, taking all the data they have and personalizing ads based on that data. Before Meta started spending GPUs on training Llama, this is what all those purchased GPUs were for (and many of them still are).

In summary, Zuck’s personally and financially motivated to own the next platform, and he doesn’t care what it costs to do so.

II. Meta’s Current AI Offerings

For a quick summary of Meta’s models (description from Devansh’s breakdown of Llama 4):

Scout: an efficient multimodal LLM with a 10 Million Token Context Window that can run on one GPU.

Maverick: a more powerful model (still reasonably efficient) that stomps out the “intelligent models”- GPT 4.5, 4o, Claude 3.7, DeepSeek R1, Gemini 2.0 Flash etc.

Behemoth: A 2 trillion parameter model that is being called best in class, but is not released yet because it’s still mid-training. This is used as the teacher model for the lighter ones.

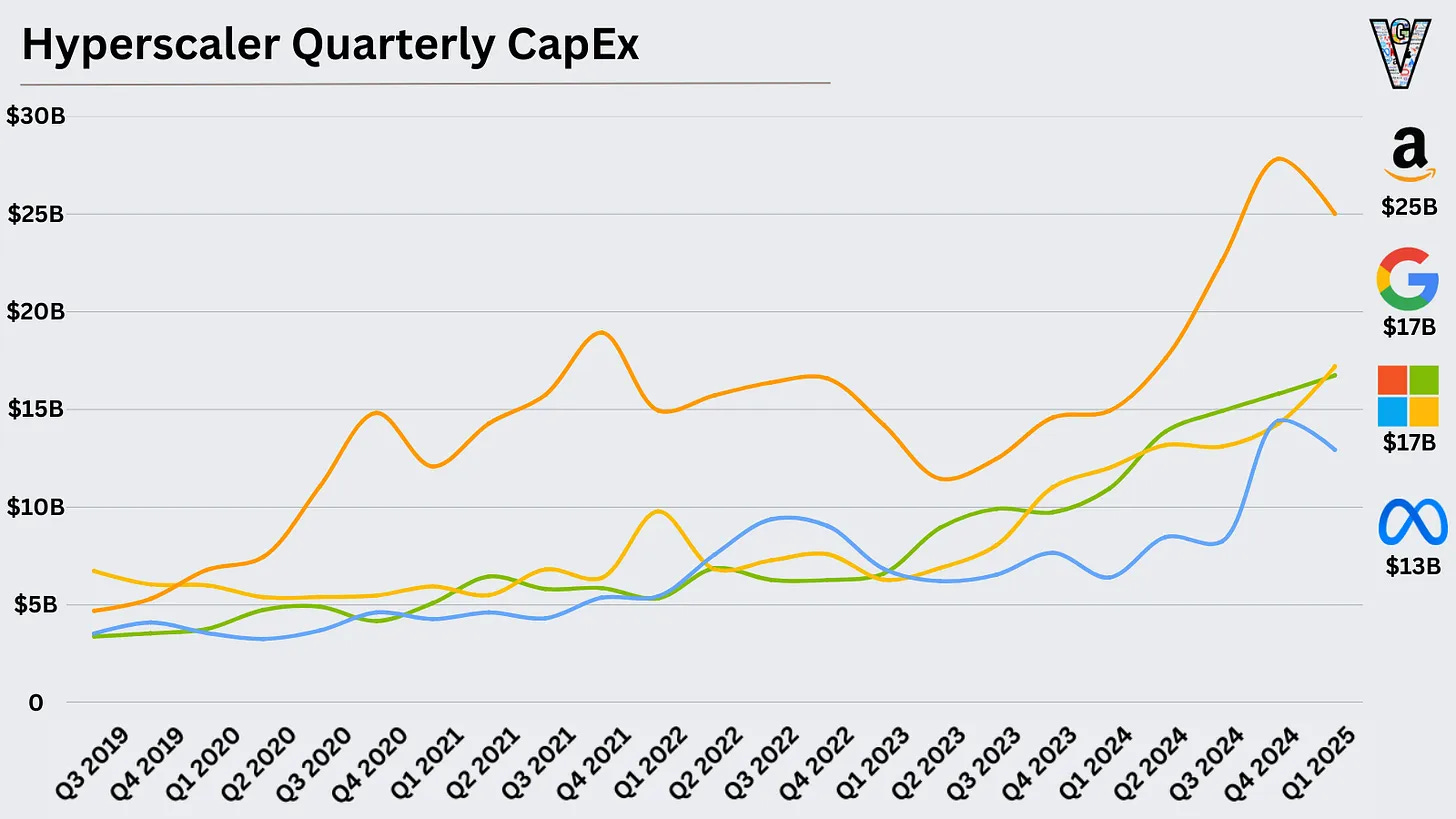

Much of their CapEx is going towards data centers and GPUs to fund model training.

These large investments are about ensuring they have control over a leading-edge model. They don’t need to profit from it because of their other profitable applications, ergo the open-source strategy. Open source gives them a differentiated strategy, plus a flywheel of community development and data to help them keep that model at the leading edge.

So, how are their models performing?

According to benchmarks, those models are struggling to keep up with the competition:

On LMArena, Llama 4 Maverick clocks in at 39th place among text models. According to Artificial Analysis, it’s fairly uncompetitive in terms of intelligence (logical, given it’s not a reasoning model). It does perform reasonably well on speed and price, but it isn’t leading on any metric.

I want to be very clear: benchmarks can be misleading. As a friend recently said, “I never met a benchmark I didn’t like.”

But a litany of reports have suggested the same:

From Platformer: “The company lost last year to the Llama 4 dysfunction.”

From Stratechery: Llama 4 was widely viewed as a disappointing model, and a big portion of the original Llama team has long since left Meta.

From The Information: Meta delayed Llama 4, the newest generation of its AI model, because it wasn’t performing as well on technical benchmarks as the company had hoped.

From the WSJ: Company engineers are struggling to significantly improve the capabilities of its “Behemoth” large-language model, leading to staff questions about whether improvements over prior versions are significant enough to justify public release, the people said.

This is not meant to be an indictment of Meta; I keep this newsletter optimistic! But based on reports and actions, it feels fair to conclude that Meta’s models are underperforming expectations. So why should they keep chasing the prize?

III. Meta’s Vision for AI

Zuck has described a few ~big~ opportunities for Meta in AI:

1. Creating “The Ultimate Business Results Machine”

Meta’s advertising engine has become so good that, in most cases, Meta knows a business’s customers far better than the business itself does. This advertising black box is the clearest path to AI ROI for Meta.

In a Stratechery interview, Zuck described what this system would look like:

“Improve recommendations, make it so that any business that basically wants to achieve some business outcome can just come to us, not have to produce any content, not have to know anything about their customers. Can just say, ‘Here’s the business outcome that I want, here’s what I’m willing to pay, I’m going to connect you to my bank account, I will pay you for as many business outcomes as you can achieve.’” (lightly edited for clarity)

2. AI-Generated Personalized Content

Facebook and Instagram have transformed from social media to media, as people mostly engage with content from people they’re not connected to.

Now, we may see the next evolution: personalized content generated by AI.

From Stratechery: “You can think about our products as there have been two major epochs so far. Well, the third epoch is I think that there’s going to be all this AI-generated content and you’re not going to lose the others, you’re still going to have all the creator content, you’re still going to have some of the friend content.” (lightly edited for clarity)

However, as referenced earlier, the big vision Zuck’s fighting for with these AI investments is a personalized and ever-present AI. Again, the ROI is increased engagement on the platform, leading to more advertising dollars.

3. The General Purpose AI Assistant

This is the biggest prize in AI right now. Viewed through this lens, OpenAI’s Jony Ive acquisition makes sense, and the importance of memory becomes clear. The future is a world where you have your assistant with you at all times, and whoever owns that assistant has pole position to be the AI company. It’s what OpenAI, Meta, and Google are fighting for.

"There are two primary values we're aiming to deliver. On the AR and mixed reality side, our main goal is to create a strong sense of presence. The other major focus is personalized AI, which is where Llama, Meta AI, and related efforts come in. There's a lot of ongoing development to make these models increasingly intelligent, but I think things will become truly compelling when the AI is personalized specifically for you." - Zuck on Huge if True (lightly edited for clarity)

Meta’s got user data, the lead in AR/VR/AI glasses, and they’ve got a model that could be in the race for the leading edge. They’re well-positioned to compete for this prize, and it’s the one that’s got to be top of mind for Meta when making these investments.

IV. How do they achieve that vision (hint: people still matter)?

We’ve now come full circle. Meta and Zuck have the personal scars, the financial motivation, and the vision for their AI future. But how do they get there?

There are three primary vectors of improvement for AI: compute (GPUs/data centers/energy), data, and algorithms (as a proxy for human talent). The compute bottleneck has eased, and Meta has been acquiring more GPUs than its rivals since 2023.

With the Scale investment and all the talent investments referenced above, they’re making a bet on data and on talent. But mostly on talent.

For all the talk of AI automating human labor, AI advancement is being driven by humans.

Steve Jobs told us to “Never forget the dynamic range of humans.” Well, Zuck hasn’t. And betting on talent is his best shot at achieving his vision and avoiding the same platform pains that have played out over the last decade.

As always, thanks for reading!

Disclaimer #2: The information contained in this article is not investment advice and should not be used as such. Investors should do their own due diligence before investing in any securities discussed in this article. While I strive for accuracy, I can’t guarantee the accuracy or reliability of this information. This article is based on my opinions and should be considered as such, not a point of fact. Views expressed in posts and other content linked on this website or posted to social media and other platforms are my own and are not the views of Felicis Ventures Management Company, LLC.
