A Deep Dive on Inference Semiconductors

Disruption, The Memory Wall, and Software Moats

The theory of disruption states that a disruptive technology provides a new approach to the market with significantly less functionality at a significantly lower price, targeting a market with an oversupply of performance.

I.e., the current market-leading technology provides more functionality than most customers need.

In theory, inference semiconductors fit this perfectly. They cut out every piece of the semiconductor that’s not necessary for inference, trading flexibility for performance.

With the rapidly growing inference market and the continued shift of computing time from training to inference, the inference semi providers are well positioned!

In practice, Nvidia’s innovation, iteration cycle, tens of thousands of engineers, and billions of dollars of R&D create an uphill climb for startups to compete. That said, the market opportunity is so large here that it creates a classic VC value proposition: if it works, it will work big.

The three primary challenges that companies across the landscape have to solve are:

The memory wall & scalability: how to manage the memory requirements of leading-edge models & how to build multi-chip systems to account for that.

Software & utilization: how to make chips programmable.

The GTM problem: figuring out a sustainable Go-to-Market motion (i.e. who to sell to).

A caveat: If small, customized models become the default for AI applications, this is a market unlocker for these companies, and the value prop becomes much more attractive.

The rest of this report will dive into the landscape of inference semiconductors, their technical approaches, and the problems they’ll have to overcome to achieve their goals. A preview of the market landscape here:

1. Why do we Need Inference Chips and How do They Work?

The logic is straightforward for why inference semiconductors should exist: they specialize in a narrow set of use cases, delivering performance improvements at the expense of generality.

Specialization SHOULD yield performance improvements; if the inference market is large enough, then companies should be built in the space.

The point of inference semiconductors is to cut everything out of the semiconductor that’s not necessary for inference (so they basically become matrix multiplication machines).

To understand how they achieve this, I’ll provide a quick overview of semiconductor architectures (for a deeper dive, you can read this excellent breakdown of Cerebras from Irrational Analysis):

At their core, semiconductors are defined by:

Raw compute power (number of cores and power of cores)

Flexibility (the variety of tasks a chip can execute)

Memory capacity and how the cores access it (memory hierarchy)

Other variables matter, like how they communicate with other chips and how software interacts with them as well, but to keep it simple: it’s compute power, flexibility, and memory management.

Traditionally, CPUs are made up of a small number of highly capable cores that can execute a wide variety of operations. GPUs, on the other hand, have a massive number of cores that can only execute simple operations.

For AI semiconductors, memory hierarchy is especially important:

Within each core, chips have registers that store very small amounts of information. For example, they may store input data that will be immediately calculated. Then they have SRAM, another form of on-chip memory.

On-chip memory has the lowest latency and consumes the least power. For reference, Google’s TPUs use 200x more energy to access data off-chip than on-chip. The problem is there’s only so much room on the semiconductor! So, the more on-chip memory there is, the less computing is available.
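To make that trade-off concrete, here’s a minimal sketch of how quickly off-chip accesses dominate the energy budget. It takes the 200x ratio from the TPU figure above and treats one on-chip access as one unit of energy; the function name and the access fractions are my own assumptions for illustration, not measurements.

```python
def relative_memory_energy(off_chip_fraction, off_chip_ratio=200.0):
    """Average energy per memory access, in units of one on-chip access.

    off_chip_ratio=200 comes from the TPU figure quoted in the text;
    off_chip_fraction is a hypothetical share of accesses that miss on-chip memory.
    """
    if not 0.0 <= off_chip_fraction <= 1.0:
        raise ValueError("off_chip_fraction must be between 0 and 1")
    on_chip_part = (1.0 - off_chip_fraction) * 1.0
    off_chip_part = off_chip_fraction * off_chip_ratio
    return on_chip_part + off_chip_part


for fraction in (0.0, 0.01, 0.10, 0.50):
    print(f"{fraction:.0%} off-chip -> {relative_memory_energy(fraction):.2f}x energy")
```

Under these assumptions, sending just 10% of accesses off-chip makes the average access cost roughly 21x an on-chip one, which is why designers fight so hard to keep data on the chip.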

So, all other data has to be stored off the semiconductor. For GPUs, high-bandwidth memory, or HBM, is in high demand because it offers the highest off-chip memory bandwidth. We can see how an Nvidia GPU would access memory here (the SM is a compute core):

This can get dense, but it’s important. Really important!

GPUs need to be used for both training and inference.

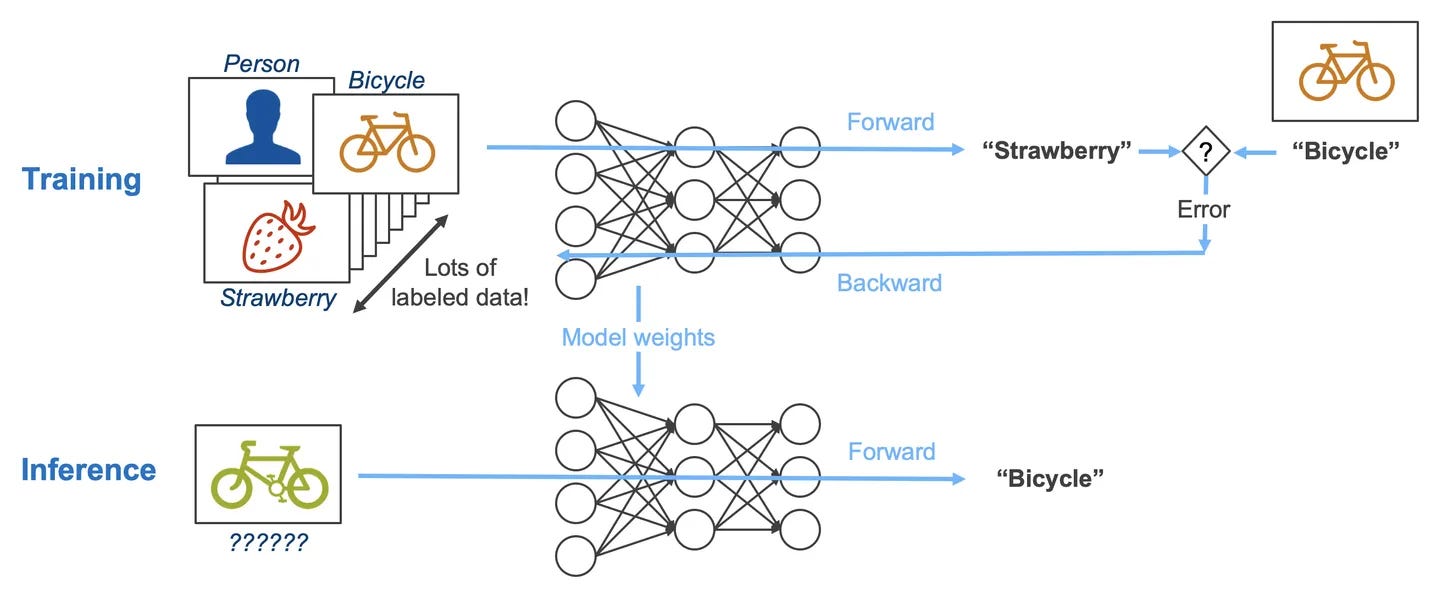

Training tends to be more complex than inference. The goal of training a neural network is to most accurately represent a given data set (which resembles a real-world phenomenon you’re modeling). It requires forward passing and backward passing of data, calculating derivatives for parameters in the model, and constantly updating model weights.

It requires more flexibility for these varied operations.

Inference, on the other hand, is a simpler workflow built around matrix multiplications.

At a circuit level, this means inference semiconductors need a lot of multiplication and addition capability (fused multiply-add circuits, for example). Many inference chip approaches cut out control logic (parts of the semiconductor that give instructions to the circuits) to maximize the number of these circuits. That makes them much less flexible but much more capable for inference.
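To show why multiply-add capability is the thing to maximize, here’s a minimal sketch of a matrix multiplication written so that every step of the inner loop is a single multiply-add. The function name and the step counter are illustrative assumptions; real hardware does this in parallel circuits with a single rounding step, which plain Python does not replicate.

```python
def matmul_fma(a, b):
    """Multiply a (m x k) by b (k x n) using only multiply-add steps.

    Returns the product and the number of multiply-add steps performed.
    """
    m, k, n = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions of a and b must match")
    out = [[0.0] * n for _ in range(m)]
    fma_count = 0
    for i in range(m):
        for j in range(n):
            acc = 0.0
            for p in range(k):
                # One multiply-add: the operation an FMA circuit performs.
                acc = acc + a[i][p] * b[p][j]
                fma_count += 1
            out[i][j] = acc
    return out, fma_count


product, steps = matmul_fma([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(product, steps)
```

The step count is m * k * n, so the work grows with the size of the matrices and nothing else. That’s the case for filling the die with multiply-add units instead of control logic.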

FuriosaAI has shared a good analogy comparing SpaceX to inference semiconductors: they’re building from first principles, cutting out everything that’s not inference-specific, much like SpaceX did in building rockets by simplifying rockets and cutting out as many unnecessary costs as possible.

I’ll call out that not all AI chip companies are hyper-specializing for inference. Tenstorrent, for example, believes the future of AI is mixed workloads with CPUs and GPUs. They still include control logic but have built a semiconductor designed for AI from the ground up.

The “baby RISC-V CPUs” act as control logic.

In summary, GPUs are more powerful, have more memory, and are more complex. Inference chips cut out the “unnecessary stuff” to be as efficient as possible. In theory, the TCO should be better for inference-specific semiconductors.

2. The Inference Semiconductor Landscape

Now that we have the theory in place, let’s discuss, at a high level, the various approaches companies are taking in this space.

Here’s a map of some of the approaches across the landscape:

There’s quite a diverse set of approaches, even within each of these boxes, so take the “labeling” with a fistful of salt.

In reality, Nvidia has a near monopoly on training, so most of these chips are targeting the inference market. Dylan Patel shared that Nvidia has approximately 97% market share if we remove Google’s TPUs from the equation (70% market share with TPUs). The remaining 3% comes mostly from AMD’s revenues. This is fairly consistent with estimates reported by Next Platform last year.

So, inference-focused chips haven’t seen mass adoption yet. In large part, this is because of Nvidia’s moats in software and networking. We can see the amounts raised by some leading startups here (note: funding rounds often don’t get announced for many months after the deal is closed).

Instead of doing a deep dive into each of these companies, I’ll discuss the problems everyone’s trying to solve!

3. The Memory Wall & Scalability

I alluded to the memory problem earlier, but the core idea is that AI workloads are not compute-bound; they’re memory-bound. Positron AI’s founder gave a great talk on the economics of AI and the memory problem. In it, he explained how transformers are a more memory-intensive operation than previous AI workloads:

The basic problem is that the size of models has rapidly outpaced the memory available on chips:

For example, Llama 405B requires roughly 800GB of memory to be served. So, no single chip can run inference for these models. To only use on-chip memory, we need a lot of inference semiconductors! For example, Groq linked 576 of its chips together to run Llama 70B.
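The arithmetic behind that figure is simple if we assume 16-bit weights (2 bytes per parameter), which is an assumption on my part. This sketch counts only the weights and ignores the KV cache and activations; the 0.25 GB of on-chip memory per chip is a made-up number for illustration, not any vendor’s spec.

```python
import math


def weight_memory_gb(num_params, bytes_per_param=2):
    """Memory needed just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def chips_needed(model_gb, on_chip_gb_per_chip):
    """Minimum number of chips so the weights fit entirely in on-chip memory."""
    return math.ceil(model_gb / on_chip_gb_per_chip)


llama_405b_gb = weight_memory_gb(405e9)  # assumes 16-bit weights
llama_70b_gb = weight_memory_gb(70e9)

# Hypothetical accelerator with 0.25 GB of on-chip memory (not a real spec).
print(llama_405b_gb, llama_70b_gb, chips_needed(llama_70b_gb, 0.25))
```

Under these assumptions the 405B model needs 810 GB for weights alone and the 70B model needs 140 GB, so a chip with a quarter of a gigabyte on board would need 560 copies of itself just to hold the smaller model.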

Because of this, many chips need to be linked together, and the model will be partitioned across all those chips.
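One common way to partition a layer is to give each chip a slice of the weight matrix’s rows, let each chip compute its slice of the output, and stitch the slices back together. The sketch below is a toy version of that idea in plain Python; the function names are hypothetical, and it ignores the chip-to-chip communication that makes this hard in practice.

```python
def matvec(weights, x):
    """y = W x for a weight matrix stored as a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def shard_rows(weights, num_chips):
    """Split the rows of W into contiguous shards, at most one per chip."""
    per_chip = -(-len(weights) // num_chips)  # ceiling division
    return [weights[i:i + per_chip] for i in range(0, len(weights), per_chip)]


def sharded_matvec(weights, x, num_chips):
    """Each 'chip' computes its slice of the output; slices are concatenated."""
    partial_outputs = [matvec(shard, x) for shard in shard_rows(weights, num_chips)]
    return [y for part in partial_outputs for y in part]


W = [[1, 0], [0, 1], [2, 2], [3, 1]]
x = [1, 2]
print(matvec(W, x), sharded_matvec(W, x, num_chips=2))
```

Both calls produce the same output vector. The difference is that in the sharded version no single chip ever has to hold all of W, at the cost of gathering the partial results over the network.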

This makes scalability really important.

It’s why Nvidia has spent so many resources on custom networking, and it’s a key piece of Nvidia’s moat. You can ~relatively~ easily string together thousands of Nvidia GPUs and run leading-edge models on them.

It’s also why processing-in-memory chips have recently gotten more attention. PIM essentially places memory and computing in the same cell, removing the need to go off-chip for memory.

d-Matrix, for example, recently announced their first commercial chip and 20x faster time-per-output token compared to Nvidia’s H100. When they stay on on-chip memory, their performance is really good! Naturally, when they move to off-chip memory, it declines rapidly.
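A rough way to see why the on-chip versus off-chip distinction matters so much: when generating one token at a time for a single user, every weight has to be read once per token, so memory bandwidth sets a floor on time per token. The sketch below assumes that simplified single-stream model; both bandwidth figures and the 2-bytes-per-parameter precision are hypothetical placeholders, not specs for d-Matrix or Nvidia hardware.

```python
def min_seconds_per_token(weight_bytes, bandwidth_bytes_per_s):
    """Lower bound on per-token latency if every weight is read once per token."""
    return weight_bytes / bandwidth_bytes_per_s


weights = 140e9        # 70B parameters at 2 bytes each (assumed precision)
off_chip_bw = 3e12     # hypothetical off-chip bandwidth, bytes/s
on_chip_bw = 100e12    # hypothetical aggregate on-chip bandwidth, bytes/s

slow = min_seconds_per_token(weights, off_chip_bw)
fast = min_seconds_per_token(weights, on_chip_bw)
print(f"off-chip bound: {1 / slow:.1f} tokens/s")
print(f"on-chip bound:  {1 / fast:.1f} tokens/s")
```

The compute units are identical in both cases; only the bandwidth changes. That’s the sense in which these workloads are memory-bound.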

FuriosaAI has taken a unique approach as well, called “tensor contraction processing.” Most AI accelerators rely on 2D matrix multiplication, but the base unit of LLMs, the tensor (the same “tensor” in Google’s Tensor Processing Units), is a multi-dimensional array. So Furiosa designed a chip to process multi-dimensional tensors directly, not just 2D matrices.
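For readers who haven’t met the term, a tensor contraction is a sum over a shared index between two multi-dimensional arrays; 2D matrix multiplication is the special case where both inputs have two dimensions. The sketch below illustrates the general math only, not Furiosa’s hardware design; the shapes (batch, sequence, feature) and function names are my own assumptions.

```python
def contract_last_axis(x, w):
    """out[b][s][h] = sum over d of x[b][s][d] * w[d][h], keeping x's 3D shape."""
    d_size, h_size = len(w), len(w[0])
    return [
        [
            [sum(x_bs[d] * w[d][h] for d in range(d_size)) for h in range(h_size)]
            for x_bs in x_b
        ]
        for x_b in x
    ]


def flatten_then_matmul(x, w):
    """The 2D route: reshape (batch, seq, d) to (batch * seq, d), then multiply."""
    d_size, h_size = len(w), len(w[0])
    rows = [x_bs for x_b in x for x_bs in x_b]
    return [[sum(r[d] * w[d][h] for d in range(d_size)) for h in range(h_size)] for r in rows]


x = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]  # shape (2, 2, 2)
w = [[1, 0, 1], [0, 1, 1]]                # shape (2, 3)
print(contract_last_axis(x, w))
print(flatten_then_matmul(x, w))
```

Both routes compute the same numbers. The contraction view keeps the batch and sequence structure visible instead of flattening it away before the multiply.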

The way AI semiconductors access memory is one of the key architectural differences between the various approaches on the market. So memory management and scalability are vitally important in building chips that can compete with Nvidia. The second important variable is being able to use the chips at all!

4. Software and Utilization

Purchasing hardware comes down primarily to the Total Cost of Ownership. You want to maximize (compute capacity * utilization) / (capital expenditures + operating expenditures + time/effort). (My equation may not get accepted into any technical conferences, but the gist is there!)
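Here’s the equation above as a small function, mostly to show how directly utilization feeds into the result. All inputs are in arbitrary, hypothetical units; the function name and the example numbers are assumptions for illustration, not real pricing.

```python
def return_on_compute(compute_capacity, utilization, capex, opex, effort_cost):
    """(compute capacity * utilization) / (capex + opex + time/effort)."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    total_cost = capex + opex + effort_cost
    return compute_capacity * utilization / total_cost


# The same hypothetical chip at two utilization levels.
poorly_used = return_on_compute(1000, 0.30, capex=30, opex=10, effort_cost=10)
well_used = return_on_compute(1000, 0.60, capex=30, opex=10, effort_cost=10)
print(poorly_used, well_used)
```

With costs held fixed, doubling utilization doubles the return. The effort term sits in the denominator for the same reason: hardware that’s cheap to buy but painful to program can still lose on total cost.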

While peak FLOPs are advertised, chips rarely reach them in practice. Part of this is the memory problem, but a huge part is being able to orchestrate tasks so they run efficiently across many chips.
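The memory half of that gap is often described with the roofline model: attainable performance is the smaller of peak compute and memory bandwidth times the FLOPs performed per byte moved. The sketch below applies it to a hypothetical chip; the peak and bandwidth values are placeholders, and the intensity of 1 FLOP per byte assumes single-user decoding with 16-bit weights (2 FLOPs for every 2-byte weight read).

```python
def attainable_flops(peak_flops, bandwidth_bytes_per_s, flops_per_byte):
    """Roofline model: performance is capped by compute or by memory traffic."""
    return min(peak_flops, bandwidth_bytes_per_s * flops_per_byte)


def roofline_utilization(peak_flops, bandwidth_bytes_per_s, flops_per_byte):
    """Fraction of advertised peak FLOPs the workload can actually reach."""
    return attainable_flops(peak_flops, bandwidth_bytes_per_s, flops_per_byte) / peak_flops


peak = 1e15       # hypothetical peak, FLOP/s
bandwidth = 3e12  # hypothetical memory bandwidth, bytes/s

for intensity in (1, 50, 500):
    share = roofline_utilization(peak, bandwidth, intensity)
    print(f"{intensity} FLOPs/byte -> {share:.1%} of peak")
```

At low intensity the chip spends nearly all its time waiting on memory, no matter how many FLOPs are on the spec sheet. Batching more users together raises intensity, which is one reason serving software matters as much as the silicon.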

Translated: software matters a lot!

This is at the core of Nvidia’s CUDA moat. They’ve spent 15+ years building out CUDA and the community of developers who know how to use it. They’ve built libraries and functions specialized for every major AI workload. It’s really hard software to build, and no one else has cracked the code. See SemiAnalysis’ last post on AMD vs Nvidia if you need any more proof!

I referenced the problem earlier, but some ASICs also remove most of the control logic, which means instructions have to be compiled in detail ahead of time or the chip risks severe underutilization.

The problem with cutting out control logic is it creates a huge burden on ahead-of-time compilation. Compilation is the process of translating code into instructions that can be processed by hardware. This means that oftentimes inference semiconductors require compiler experts to carefully plan how tasks are split up and assigned to the cores, sometimes manually writing assembly code to do so.
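To give a flavor of what “planning ahead of time” means, here’s a toy static scheduler that assigns operations to cores before anything runs, using a simple greedy rule (longest operation first, onto the least-loaded core). This is only a sketch of the idea: the operation names and costs are hypothetical, it ignores data dependencies and memory placement, and real compilers for these chips are far more involved.

```python
def static_schedule(op_costs, num_cores):
    """Assign each op to a core ahead of time (greedy, longest op first)."""
    core_load = [0] * num_cores
    plan = {core: [] for core in range(num_cores)}
    for op, cost in sorted(op_costs.items(), key=lambda kv: kv[1], reverse=True):
        core = core_load.index(min(core_load))  # least-loaded core so far
        plan[core].append(op)
        core_load[core] += cost
    return plan, core_load


def schedule_utilization(core_load):
    """Share of core-time spent working if every core waits for the slowest one."""
    return sum(core_load) / (len(core_load) * max(core_load))


ops = {"matmul_a": 8, "matmul_b": 7, "softmax": 3, "norm": 2}  # hypothetical costs
plan, load = static_schedule(ops, num_cores=2)
print(plan, load, schedule_utilization(load))
print(schedule_utilization([20, 0]))  # everything on one core, for comparison
```

With no control logic to rebalance work at runtime, whatever plan the compiler picks is the plan the chip executes. A bad split leaves cores idle for the whole run.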

This can be a challenging sell to customers, but what if you don’t have to sell the hardware?

Groq, Cerebras, and SambaNova are all offering cloud-based inference; this bypasses the need for customers to program their own hardware. If it works, it allows customers to take advantage of the inference-specific performance improvements without worrying about the compiler challenges.

For inference semiconductor designers to become very large companies, they’ll need to build great software that makes it easy (possible, even) to effectively use their hardware. And that’s much easier said than done.

5. The GTM Problem

Now that we have a lay of the land, you’ll notice a distinct tone of the article. Everything is compared to Nvidia. And that’s for a reason. They’re the undisputed king of the hill.

This article is really about the approaches that COULD be a viable alternative to Nvidia. It’s not just a technology problem; it’s a GTM problem.

Semiconductor startups have an uphill climb in figuring out their customer base. If we look at the options for companies to buy compute infrastructure:

Buy new Nvidia hardware for inference

Use existing Nvidia hardware for inference

Buy AMD or another chip company’s hardware

Rent hardware from a GPU cloud or hyperscaler

Buy hardware from a startup

Typically, companies buy through OEMs like Dell or Supermicro, so that provides another layer of “convincing” required.

If you’re a hyperscaler, you have another option! Use the semiconductors you’ve been developing in-house and have spent billions on! The saving grace here is that the hyperscalers do want Nvidia alternatives, and they’ve invested (through their venture arms) in many of the semiconductor startups.

Early partnerships are critical for young chip companies, and it typically takes several iterations of a product to home in on a value prop that appeals to the masses.

Austin Lyons has a great framework breaking this down through the lens of Etched.

Semiconductor startups essentially go through the phases of concept validation, market prep, commercial scaling, and finally, a sustainable roadmap:

The problem is the fact that you have to raise hundreds of millions of dollars to build a semiconductor company. But to raise that capital, you have to convince investors there’s an ROI on that investment. To do that, you need proof of customers, which is hard to get without a product. You see where I’m going with this.

The point is that it’s really hard to find customers and scale a semiconductor startup. The benefit is that if it does work, it should work big.

As alluded to earlier, the final GTM path is vertical integration. If chip companies genuinely achieve performance benefits from their hardware, then they can offer an inference cloud service that allows them to go directly to end users! They build their own software, racks, servers, and chips. They achieve the cost benefits of vertical integration and prove the superior performance of their chips.

Through this, they can also prove to potential hardware customers the effectiveness of their hardware.

6. Some Final Thoughts

If there’s anyone left reading (surely there’s someone…right?), I’ll give my summarized thoughts on the market:

Nvidia’s the king of the hill for a reason.

If one company carves out a market, I think several will.

I’m rooting for these companies.

Nvidia has a near monopoly on AI chips, and they’re still running like there’s a crew of townspeople with pitchforks behind them. They’ve moved to an annual cadence of product releases, with tens of thousands of engineers and billions of dollars of investment, in addition to their existing advantages of great hardware, networking, and software.

Tackling them head-on is really hard. If any company can carve out a niche in this market, it can become a very large company. It’s a value proposition much like the early days of venture capital (named after adventure capital!): if it works, it should work huge.

In my view, if one company proves they can win in this market, several are likely to win. If we look at the end use of AI applications, their needs vary. The question is: what do AI applications demand, and how do these different approaches meet those demands?

If they need immediate responses for certain applications, chips focused on speed like Groq make sense. Some applications may need to process many users at once, so chips that optimize for total throughput (concurrent users * processing speed) make sense.
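The throughput formula in that last sentence is worth spelling out, because it shows how two chips can each “win” on a different metric. The two configurations below are entirely hypothetical numbers chosen to illustrate the trade-off, not benchmarks of any real product.

```python
def total_throughput(concurrent_users, tokens_per_s_per_user):
    """Total throughput = concurrent users * per-user processing speed."""
    return concurrent_users * tokens_per_s_per_user


# Hypothetical latency-optimized system: few users, each served very fast.
latency_optimized = total_throughput(concurrent_users=4, tokens_per_s_per_user=500)

# Hypothetical throughput-optimized system: many users, each served more slowly.
throughput_optimized = total_throughput(concurrent_users=256, tokens_per_s_per_user=30)

print(latency_optimized, throughput_optimized)
```

In this made-up example the second system produces more tokens overall, while every individual user on the first system gets a much faster response. Which one is “better” depends entirely on the application.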

I’m particularly interested in approaches that are disruptive to the AI chip stack as a whole. Companies across the inference landscape have elements of disruption. Approaches to create model-specific ASICs faster, like Taalas, are particularly interesting.

Semiconductors are cool again. I think they’re the most important industry in the world. The more competition, the better.

If you’re working in this ecosystem, feel free to reach out. I’d love to hear your perspective on the market.

As always, thanks for reading!

Disclaimer: The information contained in this article is not investment advice and should not be used as such. Investors should do their own due diligence before investing in any securities discussed in this article. While I strive for accuracy, I can’t guarantee the accuracy or reliability of this information. This article is based on my opinions and should be considered as such, not a point of fact. Views expressed in posts and other content linked on this website or posted to social media and other platforms are my own and are not the views of Felicis Ventures Management Company, LLC.

Thanks for the write-up. I'm keenly interested in watching this market develop over the next few years. If we really do get AGI, or something very close to it, I can imagine that the compute associated with inference will be so unbelievably large that this industry has a decade of incredible growth ahead of it.

What are your thoughts on AMD dominating the Inference market? With the Xilinx acquisition, the FPGA technology and some of the best CPU architecture in the business. Lisa Su has claimed their chips are the best in the market when it comes to inference and Meta has already made official that they are using exclusively MI300s for their Llama inference requirements.

Possibly a $130B+ opportunity for AMD in the coming years