AI as Electricity

Some thoughts on the right analogy for AI

“There are, perhaps, few things finer than the pleasure of finding out something new. Discovery is one of the joys of life and, in our opinion, is one of the real thrills of the investment process. The cumulative learning that results leads to what Berkshire Hathaway Vice-Chairman Charlie Munger calls “worldly wisdom”. Worldly wisdom is a good phrase for the intellectual capital with which investment decisions are made and, at the end of the day, it is the source of any superior investment results we may enjoy.” - Nick Sleep, 2010 Annual Letter

In pursuit of “worldly wisdom,” I’ve been thinking about where we may be headed with AI and the right analogy that hints at that future.

While there’s no shortage of analogies for AI (I’m sorry for contributing to the problem), I heard Jeff Bezos compare AI to electricity, and I think he’s right. In my studies of data centers and the electric grid, I’ve seen hints of this analogy. As promised in my annual letter, I’d like to add more substance to that theory.

Now, every analogy has glaring logical holes (this one is certainly no exception!), so I’ll call out the three parallels I think are most relevant.

AI Data Centers & the Early Electric Grid

GE & Vertical Integration

The Utilities of the AI Age

For those in a hurry, the key analogy is this: the price of intelligence is dropping rapidly, just as the price of electricity did, unlocking more use cases for intelligence/electricity. The effect compounds on itself: more applications drive more usage (a bigger market), which drops costs, which unlocks more applications. Oh, and vertical integration is an important competitive advantage if done correctly.

For those looking for a bit more worldly wisdom, feel free to read on.

If you’re curious why I’m writing about this (a divergence from my typical industry/company deep dives), there are three reasons:

It’s easy to fall into “data collection” mode as investors, but time spent wandering in thought is time well spent! As Nick Sleep told us: “In today’s information-soaked world there may be stock market professionals who would argue that constant data collection is the job. Indeed, it could be tempting to conclude that today there is so much data to collect and so much change to observe that we hardly have time to think at all. Some market practitioners may even concur with John Kearon, CEO of Brainjuicer (a market research firm), who makes the serious point, “we think far less than we think we think” - so don’t fool yourself!”

Perhaps more importantly, information is commoditized, while wisdom is becoming scarcer. Something to think about, if you will. Consider this my call to action, to (attempt to!) provide more wisdom and a little more of my thoughts in this newsletter (as much as readers can bear to hear, I suppose).

Thirdly, I was reading Confessions of an Advertising Man, and David Ogilvy said, “You can’t bore people into buying.” While it’s abhorrent to say semiconductors and data centers are boring, it can’t hurt to throw a lighter read in once in a while!

Back to our regularly scheduled programming.

1. The AI Data Center Buildout

The US electric grid was initially built out 100+ years ago, and that buildout is remarkably similar to today’s AI data center buildout.

I first noted the resemblance between the electric grid buildout and the AI data center buildout last year:

The scaling of power plants: From 1890-1920, electric companies built the largest power plants they could. Relative performance increased as power plants grew, so electric companies supersized their power plants, much like the mega data centers of today.

“Astronomical” CapEx investments: In 1900, it was estimated that the electric industry would need $2B (or $62B in today’s dollars) over the coming five years to meet demand. As Construction Physics describes, “As one financier observed at the time, the amount of money required by the burgeoning US electrical system was ‘bewildering’ and ‘sounded more like astronomical mathematics than totals of round, hard-earned dollars.’”

The plummeting cost of electricity: Much like the cost of compute today, electricity prices dropped precipitously with scale. These price decreases led to the conclusion that electric utilities were “natural monopolies,” meaning that electricity was a race to the bottom and that the largest providers with scale would dominate the market.

Much like other infrastructure buildouts, these investments are both expensive and necessary. The upfront costs eventually lead to a falling cost of service over time. This is what happened with electricity, and it’s what’s happening to inference today.

The difference is speed: inference costs have decreased by ~90% over the course of 18 months, while it took decades for that to happen to electricity.
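To put that pace in perspective, here is a rough back-of-the-envelope sketch. It converts the ~90% drop over 18 months into an equivalent yearly decline, assuming prices fall at a constant compounding rate (my simplification, not a measured curve). The function names and the 5% yearly figure in the second calculation are purely hypothetical; the 5% is there only to illustrate what a "decades" timescale looks like, not as a historical number for electricity.

```python
import math


def annualized_decline(total_decline: float, months: float) -> float:
    """Yearly price decline equivalent to `total_decline` spread over `months`,
    assuming the price falls at a constant compounding rate (an assumption)."""
    remaining = 1.0 - total_decline              # fraction of the original price left
    return 1.0 - remaining ** (12.0 / months)    # rescale to a 12-month window


def years_to_reach(total_decline: float, yearly_decline: float) -> float:
    """Years needed to reach `total_decline` at a steady `yearly_decline`."""
    return math.log(1.0 - total_decline) / math.log(1.0 - yearly_decline)


# ~90% over 18 months, the figure quoted above.
inference_yearly = annualized_decline(0.90, 18)
print(f"Implied yearly decline for inference: {inference_yearly:.1%}")

# HYPOTHETICAL 5% yearly decline, used only to show what "decades" looks like.
print(f"Years to fall 90% at 5% per year: {years_to_reach(0.90, 0.05):.0f}")
```

Under those assumptions, a 90% drop in 18 months works out to roughly a 78% decline per year, while a steady (and made-up) 5% yearly decline would need about 45 years to get to the same place.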

2. GE & Vertical Integration

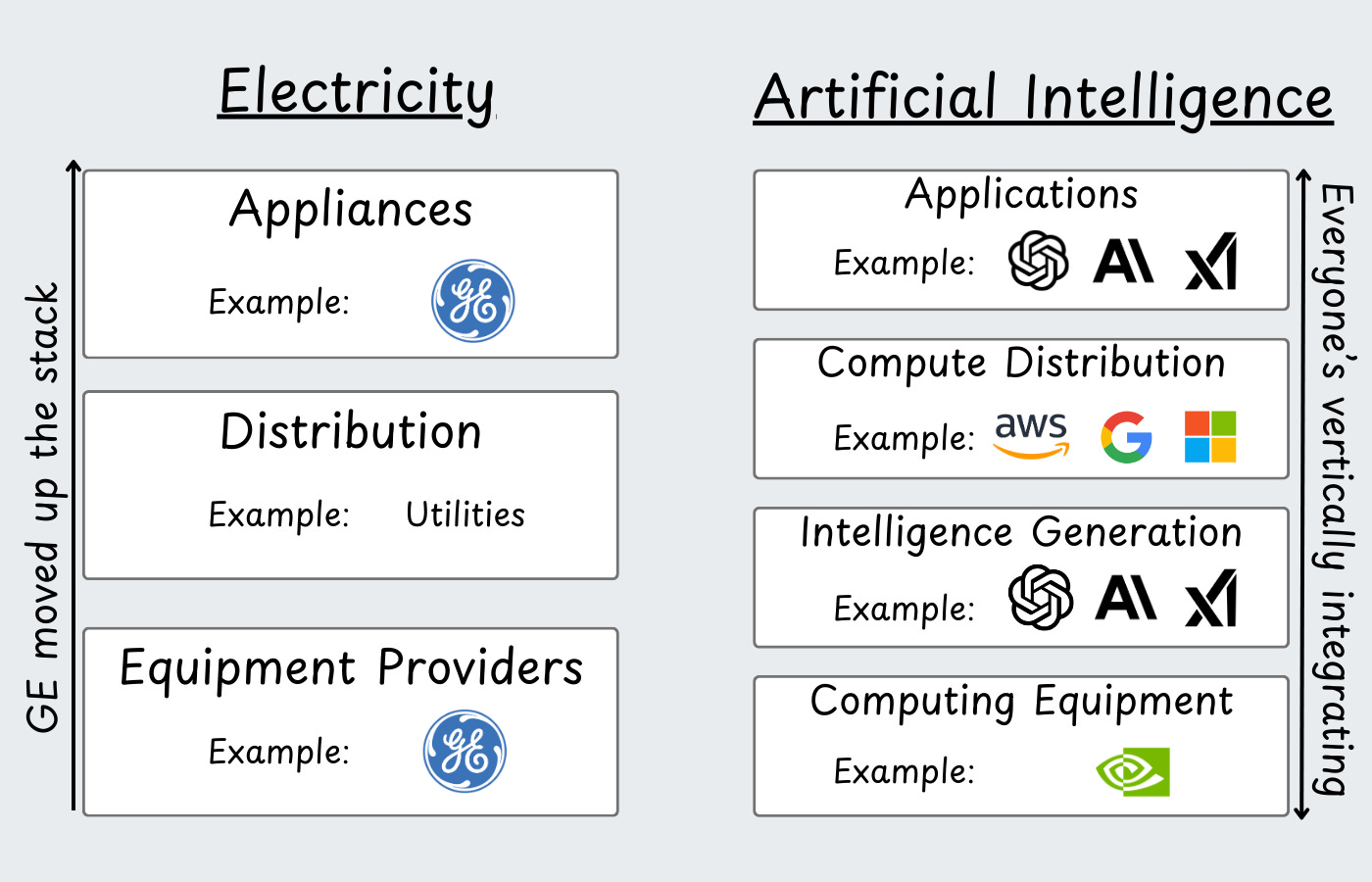

The infrastructure buildout is the first parallel; the pursuit of vertical integration is the second.

Thomas Edison unlocked the first great use case for electricity with the lightbulb. He then helped pioneer the early electric grid and the entire energy industry.

GE, which grew out of Edison’s companies, would dominate power generation in the early 1900s, becoming something like the Nvidia for power - providing the turbines, motors, and electrical equipment for the burgeoning electric industry.

That market growth began to slow as the US became electrified.

GE was a cash cow and needed expansion (sound familiar?). In 1917, Sears released an advertisement that said “Use your electricity for more than light.”

And that they did.

That ushered in a golden age of appliances in the US that would last for ~50 years. GE expanded up the stack into appliances and solidified its position as one of the giants of American industry.

We’re seeing a very interesting trend among the AI companies of today. Nvidia’s expanding into models and software. OpenAI’s reportedly designing its own chips, while already competing in the model and application layers. The hyperscalers are completely vertically integrated (at least in theory).

The one exception to GE’s vertical integration (over the long term) is that utilities ultimately owned the distribution of electricity while GE provided the equipment and, eventually, the applications.

3. The Utilities of the AI era?

Perhaps the most interesting question in this analogy is: who becomes the utilities of the AI era?

First, we have to view computing as a utility for the logic to hold up. We have historical precedent!

In the early 1970s, “timesharing” companies like University Computing Company had a huge boom (and ensuing bust) on the idea that you could split up computers and allow many people to use them at once. Engines That Move Markets describes the time: “The commercial focus of the time was therefore on ‘utility computing’ and this was reflected in the financial markets.”

IBM was talking about computing as a utility in the early 2000s. See this quote from a 2005 “Intro to Grid Computing” Handbook:

One other type of grid that we should discuss before closing out this section is what we will call e-utility computing. Instead of having to buy and maintain the latest and best hardware and software, with this type of grid, customers will have the flexibility of tapping into computing power and programs as needed, just as they do gas or electricity.

They were calling the cloud “e-utility computing”! Catchy!

Of course, the cloud became the default way to access compute power, and the hyperscalers became something like “computing utilities.”

The question then becomes: who distributes AI and who manages the customer relationships?

Clearly, the hyperscalers are well positioned to be the “distributors of AI” as well. I’d argue a company like Cloudflare fits the description well, and even the new wave of inference providers looks like it could fill that position! These companies all own the computing equipment, operate it, and distribute it to customers.

4. So why this diatribe about electricity and AI?

If the electric industry is a good guide, we’ve just started to see AI applications being built.

It took many decades to lay the infrastructure before the electrical industry was ready for appliances.

With AI, the infrastructure is already in place. We’ve got the physical infrastructure, the cloud to distribute compute, the software infrastructure to build software, and we have the software applications to integrate AI into!

Jeff Bezos gave a TED talk in the ruins of the dot-com bubble in 2003, and shared this quote:

“If you really do believe that it’s the very, very beginning, if you believe it’s the 1908 Hurley washing machine, then you’re incredibly optimistic. And I do think that’s where we are. And in 1917, Sears - I want to get this exactly right. This was the advertisement that they ran in 1917. It says: “Use your electricity for more than light.” And I think that’s where we are. We’re very, very early.”

The thing with innovation is that it’s never been good to bet against it. In 2003, Jeff Bezos was right; he was just early, and it took a long time to achieve that vision. For AI today?

I think that’s where we are. We’re very, very early.

As always, thanks for reading!

Disclaimer: The information contained in this article is not investment advice and should not be used as such. Investors should do their own due diligence before investing in any securities discussed in this article. While I strive for accuracy, I can’t guarantee the accuracy or reliability of this information. This article is based on my opinions and should be considered as such, not a point of fact. Views expressed in posts and other content linked on this website or posted to social media and other platforms are my own and are not the views of Felicis Ventures Management Company, LLC.
