Q2 ’25 Cloud Update: CapEx Keeps Rising!

Market Share, CapEx, Why Azure's Taking Share, and Why AI Isn't Shaping up like SaaS

The question at the crux of the AI ecosystem is supply and demand: How much compute is available via GPUs, data centers, and energy? And how much demand is there from applications of AI for that compute?

The hyperscalers provide the best insight into that. When I was working at Microsoft, I was constantly reminded just how broad its reach was. I mean, every company in the world seemingly uses Microsoft (that’s actually not true, it’s something like one million enterprises, but the point stands).

The tech world is built on the hyperscalers (which in turn, are built on the data center and semiconductor ecosystem they source from).

These are the most important businesses in technology. They're the largest demand generator for the semiconductor ecosystem. They're the largest vendor to software companies. They're the utilities of the computing age.

Now, they’re driving the majority of the spending on AI CapEx and supplying much of the compute to the AI labs and startups building the next supercycle of tech.

It’s why the one dedicated quarterly article I write on Generative Value is an update on their businesses:

Revenue & Performance

Market Share

Five Observations on the State of the Cloud

So while I spend 90+% of my time thinking about what the largest tech businesses will be in a decade, the hyperscalers justify a dedicated article.

On to the update.

I. Market Share & Statistics

For those that prefer cold hard numbers over commentary, feel free to look at these graphs and move on with your day! A reminder that these are my personal estimates based on 10-Qs; some assumptions have to be made around Azure’s historical numbers and around Google Cloud, which includes GSuite in its numbers.

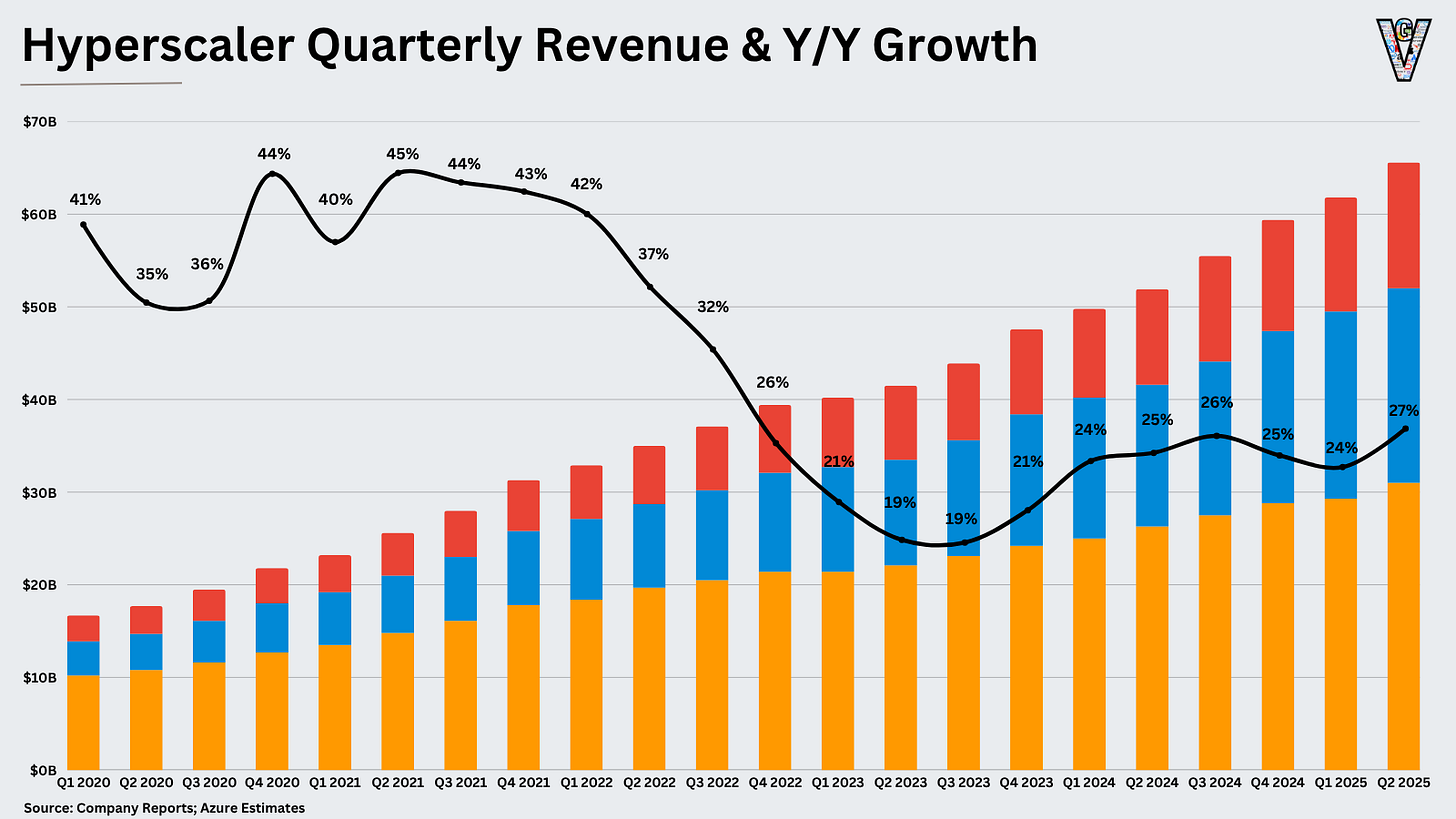

AWS generated revenue of $30.9B, up 17.5% Y/Y. Azure generated roughly $21B in revenue, up 39% Y/Y. Google Cloud generated $13.6B in revenue, up 32% Y/Y.

Combined, the three cloud giants hit a $262B revenue run rate, growing 27% Y/Y.
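The combined figures can be sanity-checked from the per-provider numbers above. This is a minimal sketch, assuming "run rate" means the latest quarter multiplied by four and that the blended growth rate is computed against year-ago revenue backed out from each provider's stated Y/Y growth; the variable names are illustrative.

```python
# Quarterly revenue ($B) and Y/Y growth as quoted in this update.
quarter = {
    "AWS": (30.9, 0.175),
    "Azure": (21.0, 0.39),  # quoted as "roughly $21B"
    "Google Cloud": (13.6, 0.32),
}

# Annualize the latest quarter (assumed definition of run rate).
combined_quarter = sum(rev for rev, _ in quarter.values())
run_rate = combined_quarter * 4

# Back out each provider's year-ago quarter, then compare the totals.
year_ago = sum(rev / (1 + growth) for rev, growth in quarter.values())
blended_growth = combined_quarter / year_ago - 1

print(f"Run rate: ${run_rate:.0f}B, blended growth: {blended_growth:.1%}")
```

Under those assumptions the result lands at roughly $262B and about 27% Y/Y, in line with the figures quoted above.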

Oracle is also rapidly ramping up CapEx, to a projected $27B in 2025 according to SemiAnalysis. They also project nearly $40B in GPU cloud revenue run rate by 2027, which would give them meaningful market share. Perhaps I’ll include them in next quarter’s update.

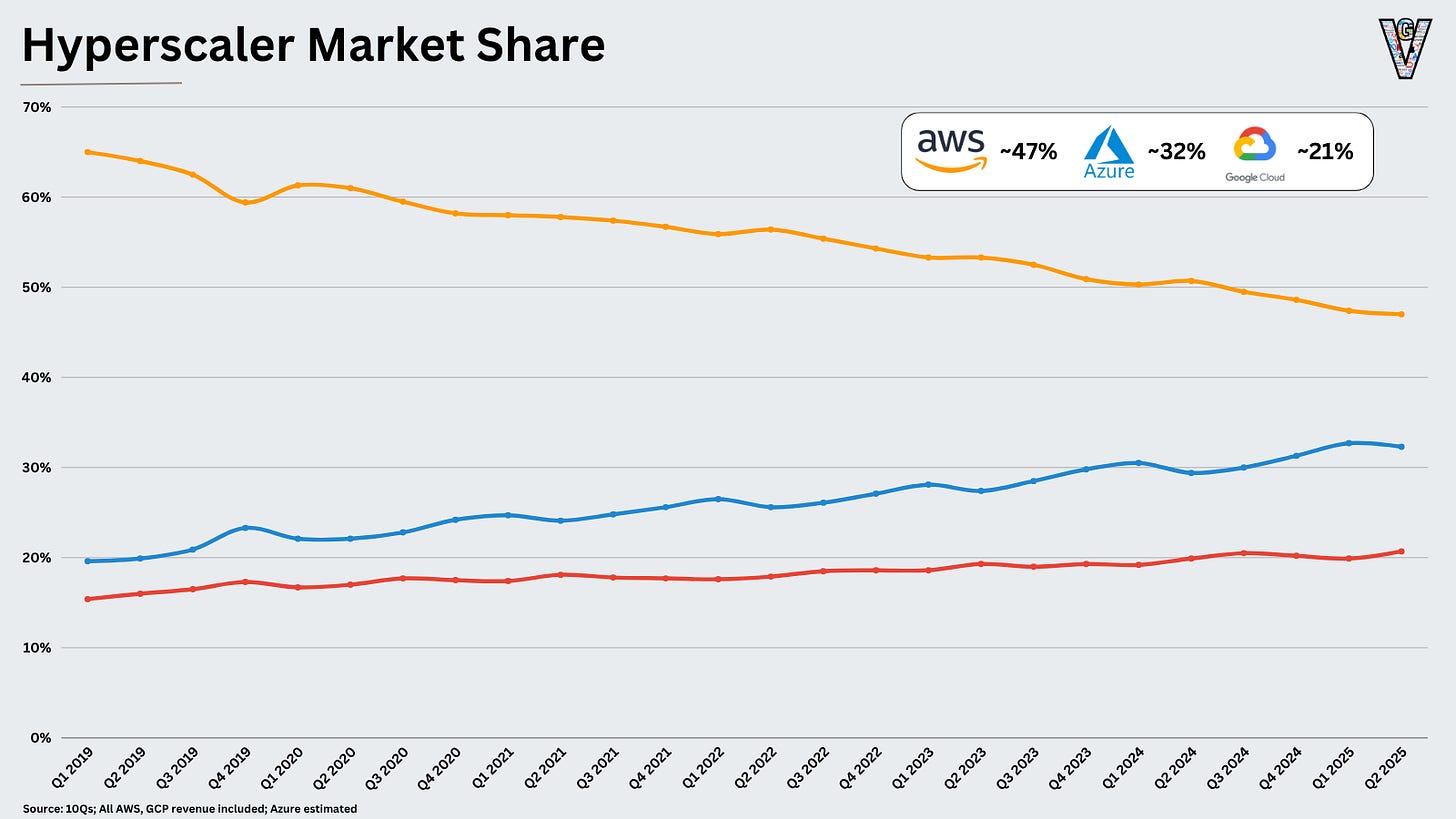

Based on those revenue numbers, that translates to the following market share numbers:
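As a rough sketch, the split among just these three providers can be recomputed from the quarterly revenue figures. It assumes the "market" is only AWS, Azure, and Google Cloud, so the percentages may differ from estimates that use a broader definition.

```python
# Quarterly revenue ($B) as quoted in this update.
quarter_revenue = {"AWS": 30.9, "Azure": 21.0, "Google Cloud": 13.6}

# Share of the three-provider total (assumption: no other providers counted).
total = sum(quarter_revenue.values())
share = {name: rev / total for name, rev in quarter_revenue.items()}

for name, fraction in share.items():
    print(f"{name}: {fraction:.1%}")
```

On these inputs AWS comes out near 47%, Azure near 32%, and Google Cloud near 21%.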

A more interesting view of market share is by net new revenue generated over the last four quarters. Of all the revenue the hyperscalers added in the last year, what share did each take?

Consider this a gauge of “market momentum”, or where market share is headed.
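The momentum idea can be approximated from the same quarterly figures. This sketch is a simplification: it uses the Y/Y change in a single quarter as a proxy for net new revenue, whereas the view described above is built on the last four quarters, so the exact percentages will differ somewhat.

```python
# Quarterly revenue ($B) and Y/Y growth as quoted in this update.
quarter = {
    "AWS": (30.9, 0.175),
    "Azure": (21.0, 0.39),
    "Google Cloud": (13.6, 0.32),
}

# Net new revenue = this quarter minus the implied year-ago quarter.
net_new = {name: rev - rev / (1 + growth) for name, (rev, growth) in quarter.items()}

# Each provider's share of the total revenue added.
total_net_new = sum(net_new.values())
momentum = {name: added / total_net_new for name, added in net_new.items()}

for name, fraction in momentum.items():
    print(f"{name}: +${net_new[name]:.1f}B, {fraction:.0%} of net new revenue")
```

Even with this single-quarter proxy, Azure takes roughly 43% of net new revenue while holding about a third of total revenue, which is the gap between current share and momentum that this view is meant to expose.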

So on to my observations from the quarter, starting with the one most on everyone’s mind: how in the world is Azure growing 39% Y/Y at a $75B run rate?

II. Five Observations on the Quarter

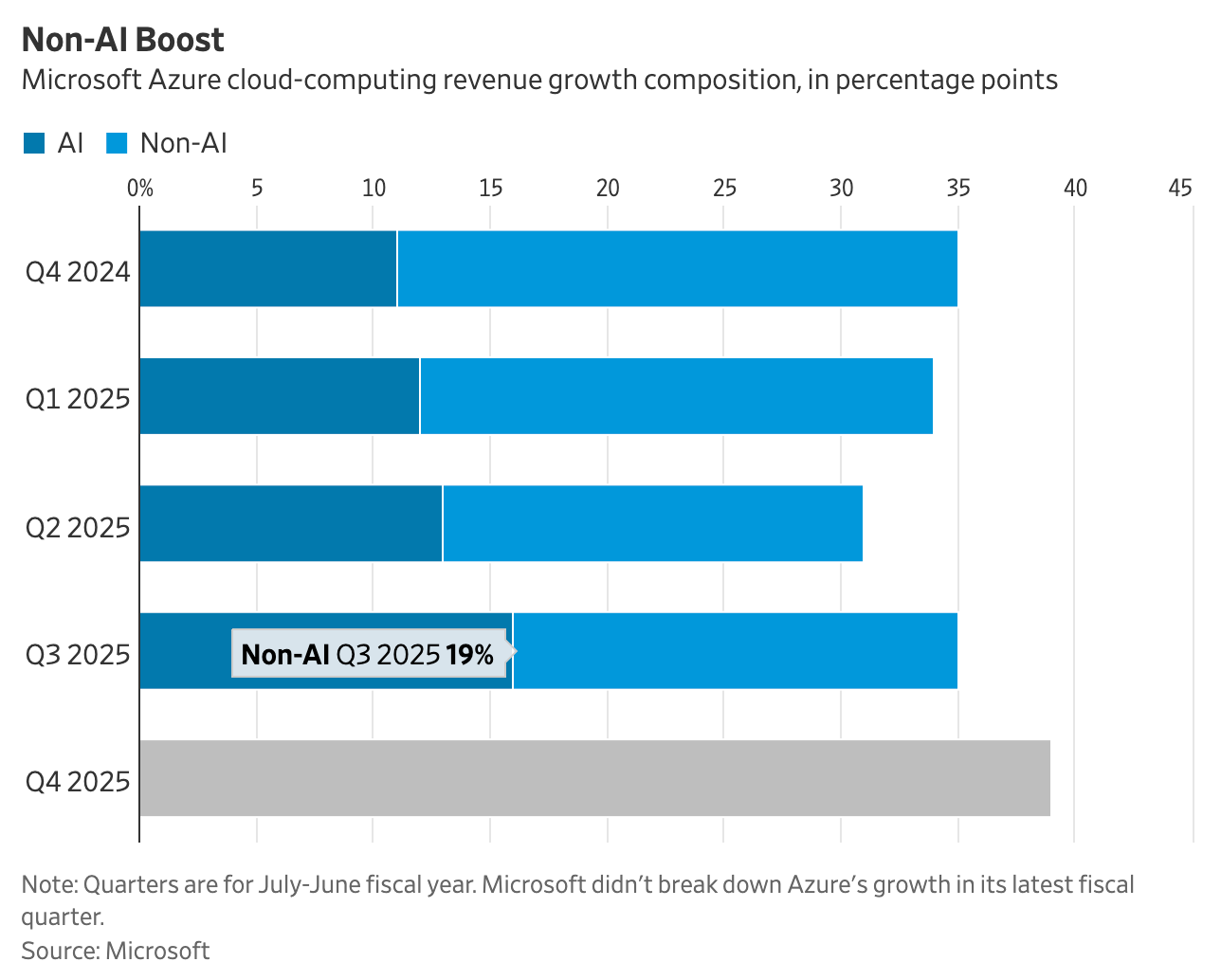

AI is driving Azure’s outperformance.

Let’s revisit the chart above. In three years, Azure has moved from generating ~30% of annual net new cloud revenue to ~43%. They’ve accelerated growth from 27% two years ago to 39% this quarter.

To start, case studies will be written on Satya’s execution with AI. It’s led to the biggest differentiator in their cloud business today. First, because of their partnership with OpenAI, they can offer the OpenAI API directly through Azure. To my knowledge, OpenAI is the only other API provider of OpenAI’s models.

Second, they’re the primary cloud provider to OpenAI, which could grow into the world’s largest cloud customer in the coming years. Note that Azure lost exclusive provider status after not being able to deliver enough capacity, but they’re likely still the largest OpenAI cloud provider by far.

A large % of Azure’s growth has been driven by these AI workloads over the last few quarters:

If you include Microsoft’s share of OpenAI today (reportedly around 33%), Microsoft’s investments in OpenAI will result in hundreds of billions in value creation.

When you add on the power of Microsoft’s distribution network (their relationship with nearly every enterprise in the Western World), it’s an incredibly powerful combination that’s challenging to compete with.

So yes, Azure’s taking share, but all three providers continue to do very well, especially at their size. Growing these businesses is priority number one for the hyperscalers, and a large part of that is growing the AI business, which brings us to point number two.

AI applications continue to grow ~rapidly~ and exceed (their already lofty) expectations.

The hyperscalers are in the unenviable position of trying to predict how much supply the AI ecosystem will need over the next decade, and matching their investments to meet that demand. When in doubt, they’ll err on the side of overinvesting to ensure they don’t miss the current land grab moment.

So they’re closely watching demand signals to see if they need to decrease, maintain, or increase investments. And those demand signals are a screaming buy right now.

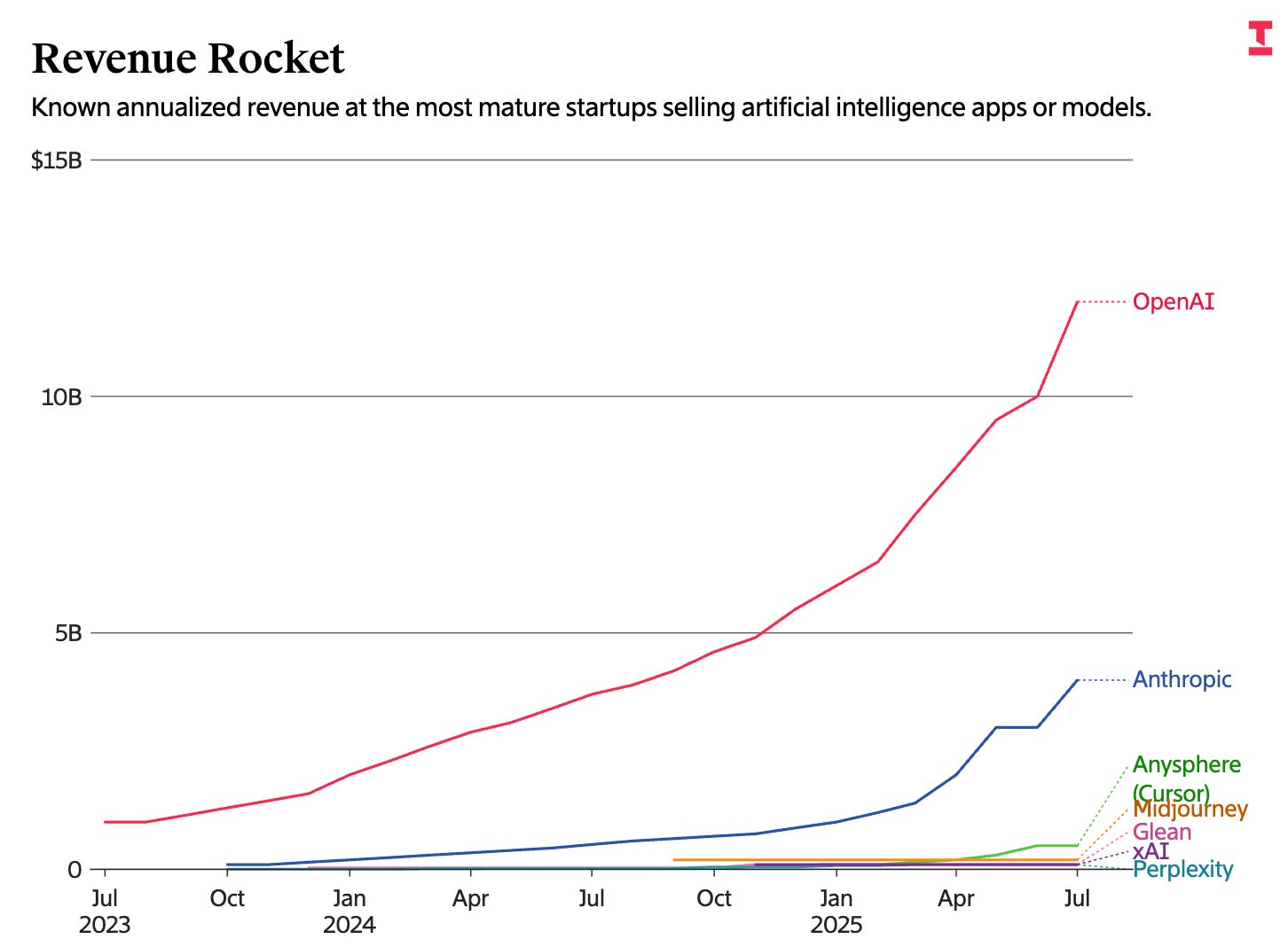

Take a look at some of these recent (reported) stats from AI model and application companies:

OpenAI roughly doubled its revenue in the first seven months of the year, reaching $12 billion in annualized revenue. They expect that number to hit $20B by the end of the year.

Anthropic hit a roughly $4B run rate at the beginning of July…by the end of July, that number had grown to $5B. They expect that number to grow to $9B by the end of the year.

Cursor hit a $500M revenue run rate, in roughly two years of selling.

Lovable grew from $1M to $100M in 8 months.

ServiceNow expects to hit $1B in AI revenue in 2026.

We can see some of those numbers visualized from The Information here:

For all the questions around AI today, there’s no question about the growth rates of these companies!
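To put those figures on a common footing, they can be converted into implied compound monthly growth rates. This is a back-of-the-envelope sketch: the helper `monthly_growth` is illustrative, and it assumes the OpenAI and Anthropic figures are end-of-July numbers with five months remaining until year end.

```python
def monthly_growth(start: float, end: float, months: float) -> float:
    """Compound monthly growth rate needed to go from start to end."""
    return (end / start) ** (1 / months) - 1

# OpenAI: revenue roughly doubled over the first seven months of the year.
openai_ytd = monthly_growth(1, 2, 7)
# OpenAI: $12B annualized now, $20B expected by year end (assumed 5 months).
openai_target = monthly_growth(12, 20, 5)
# Anthropic: $4B to $5B run rate during July.
anthropic_july = monthly_growth(4, 5, 1)
# Anthropic: $5B now, $9B expected by year end (assumed 5 months).
anthropic_target = monthly_growth(5, 9, 5)

for label, rate in [
    ("OpenAI, year to date", openai_ytd),
    ("OpenAI, implied by target", openai_target),
    ("Anthropic, July", anthropic_july),
    ("Anthropic, implied by target", anthropic_target),
]:
    print(f"{label}: {rate:.1%} per month")
```

Under those assumptions, both year-end expectations imply sustained growth of roughly 10% to 13% per month, about the pace OpenAI has averaged so far this year.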

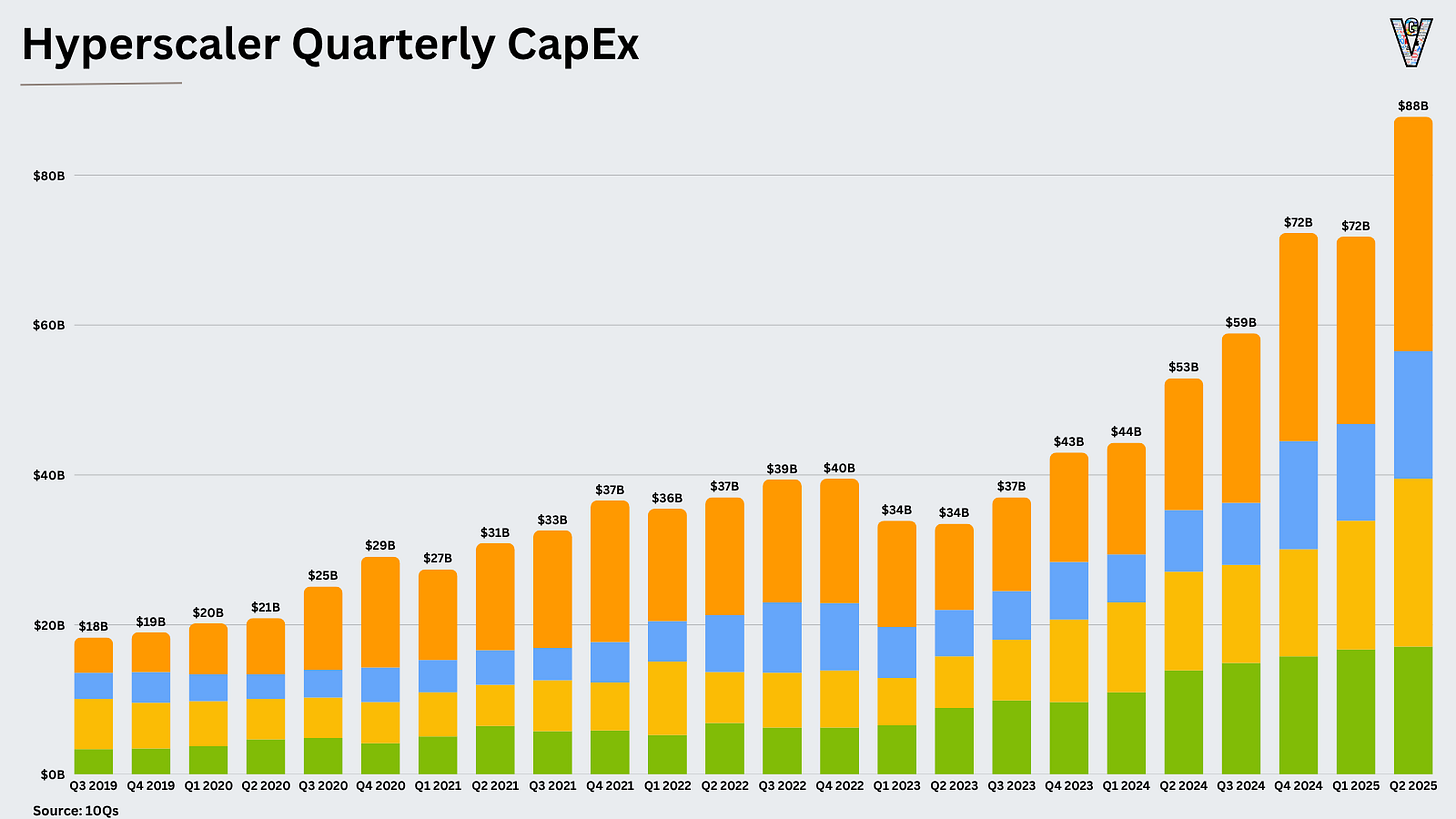

Those demand signals are driving more and more CapEx.

So the hyperscalers are basing their investment decisions on those demand signals, which are telling them to keep spending. Because there's a land grab to be the cloud provider to support these AI applications, the hyperscalers will invest whatever they need to win those early AI application workloads.

If we look at the early history of AWS, much of their early dominance came from the fact that they built a near monopoly on digital-native and SaaS businesses building on the cloud. While Microsoft focused on the enterprise, AWS built mindshare in this “small market.”

But as I keep bringing up: an early monopoly on a rapidly growing market is a dangerous recipe!

The hyperscalers want to do everything they can to support the fastest-growing AI application startups. This is reflected in the continued "foot on the pedal" capital expenditures:

Despite these investments, the hyperscalers remain capacity-constrained, as noted by all three providers. Interestingly, they also called out the changing “shape” of CapEx investments.

These CapEx investments are changing shape, shifting towards inference and more scalable workloads.

Both Meta and Microsoft made comments along the lines of "We also expect a greater mix of our CapEx to be in shorter-lived assets in 2025 and '26 than it has been in prior years."

From my understanding, this means they're spending less on physical data center buildouts and more on shorter-term assets like GPUs, networking, and storage.

This tells us three things: (1) they've made progress in physical buildouts, (2) they’re derisking their CapEx investments, as it becomes increasingly risky to invest in 15-year assets in the latter part of an investment cycle, and (3) they want their CapEx to be catered towards inference and as scalable as possible. I called this out in last quarter's update, and we're seeing it come to fruition:

Inference, as the enabler of applications, resembles the recurring SaaS revenue model of the last decade. The hyperscalers will do everything they can to power those applications.

Training, on the other hand, requires larger, upfront investments. As Zuck described, “I’d guess that in the next couple of years, the training runs are going to be on gigawatt clusters, and I just think that there will be consolidation.” These large-scale expenses present a less attractive risk-reward profile.

The last two years have come with a lack of clarity on the future of AI. In the absence of clarity, the biggest risk was underinvesting.

However, as the economics of AI come into focus and these infrastructure investments unlock AI applications, the hyperscalers can focus on investing in a future that provides them the best ROI per dollar deployed with the least risk of overinvestment. Risk-adjusted AI, if you will!

If it’s not clear, I’m overwhelmingly positive on the short-term and long-term economic characteristics of these businesses. However, I will share the biggest question for their businesses (in my opinion) over the next decade.

How different does the value chain in an AI world look from the value chain in a SaaS world?

The biggest question for the hyperscalers is not whether they lose market share, but whether the value chain shifts at their expense, in favor of the foundation model and app companies.

If we look at the rise of the SaaS wave in the 2010s, the largest early driver of AWS's success was digital natives like enterprise SaaS companies and consumer tech companies.

These SaaS products were essentially a set of "database and compute" wrappers. As the primary provider of database and compute, AWS became the infrastructure that captured the majority of value.

Today, though, the primary infrastructure for AI applications is the models themselves; the largest applications are “LLM wrappers.” The AI labs are assuming the position of both infrastructure and application providers, sitting on top of the hyperscalers.

So while OpenAI and Anthropic are a fraction of the hyperscalers' size today, they could grow to match their significance over the next decade. As I said at the beginning, the hyperscalers give us the best insight into both supply and demand of AI. And the dynamics between the hyperscalers, their AI suppliers (Nvidia), and their AI demand generators (OpenAI, Anthropic, etc.) will be fascinating to watch.

As always, thanks for reading!

Disclaimer: The information contained in this article is not investment advice and should not be used as such. Investors should do their own due diligence before investing in any securities discussed in this article. While I strive for accuracy, I can’t guarantee the accuracy or reliability of this information. This article is based on my opinions and should be considered as such, not a point of fact. Views expressed in posts and other content linked on this website or posted to social media and other platforms are my own and are not the views of Felicis Ventures Management Company, LLC.
